ClosedAI

What was the cause of the drama at OpenAI? Plus: Digital Marketing News & Music Monday

It turns out it is a lot harder than I imagined to have a full-time gig and write a book at the same time.

It’s been more than a month and a half since I last posted. During that time I had two digital marketing team members leave our agency.

While I am happy for them and take satisfaction in their continued success, it left me scrambling to address their workload, and sent me down the path of finding the talent to replace them.

Thankfully, we had an embarrassment of riches in our search and now have some very talented new interns on board.

That was taking up a lot of my time. Then throw in trying to stay current with all of the developments in AI while the world at large is burning, and let’s just say my focus was elsewhere.

But a lot has happened in the meantime, and the craziest of it unfolded over the past week or so at the organization that created ChatGPT.

It is very important that society think through and address the issues AI raises sooner rather than later, so stick with me. We have a lot to cover.

Let’s get to it.

Not-So OpenAI

If you subscribe to The Reputation Algorithm, you are far more likely than not to at least be aware that the most prominent AI organization, OpenAI, recently fired its CEO Sam Altman under mysterious circumstances.

I’m not inclined to pay much attention to corporate gossip or stories about company infighting, but the news of Altman’s dismissal was significantly different for a variety of reasons:

First, OpenAI is one of the leaders, if not the leader, in artificial intelligence development.

Second, the reasons given for Altman’s firing were as clear as mud.

And third, OpenAI’s mission and the nature of its organizational structure gave rise to speculation over whether or not there is something we all should worry about.

OpenAI’s Mission

OpenAI describes itself as an AI research and deployment company and states that its mission “is to ensure that artificial general intelligence benefits all of humanity,” defining AGI as “AI systems that are generally smarter than humans.”

Here’s a screenshot of the organization’s About page, in case they decide to change their mission at some point (remember when Google’s motto was “Don’t be evil”?):

Wikipedia defines AGI as a non-human system that could learn to accomplish any intellectual achievement humans are capable of or any “autonomous system that surpasses human capabilities in the majority of economically valuable tasks.”

In its December 11, 2015, blog post announcing the creation of OpenAI, Greg Brockman and Ilya Sutskever wrote:

Because of AI’s surprising history, it’s hard to predict when human-level AI might come within reach. When it does, it’ll be important to have a leading research institution which can prioritize a good outcome for all over its own self-interest.

We’re hoping to grow OpenAI into such an institution. As a non-profit, our aim is to build value for everyone rather than shareholders. Researchers will be strongly encouraged to publish their work, whether as papers, blog posts, or code, and our patents (if any) will be shared with the world. We’ll freely collaborate with others across many institutions and expect to work with companies to research and deploy new technologies.

OpenAI’s Organizational Structure

In the immediate wake of Sam Altman’s firing, Platformer’s Casey Newton dismissed OpenAI’s organizational structure as “a mess.”

It is, if your frame of reference is the structure of a typical startup, and to Newton’s credit, he later revised his opinion. Let’s take a look at why he might have labeled it “a mess.”

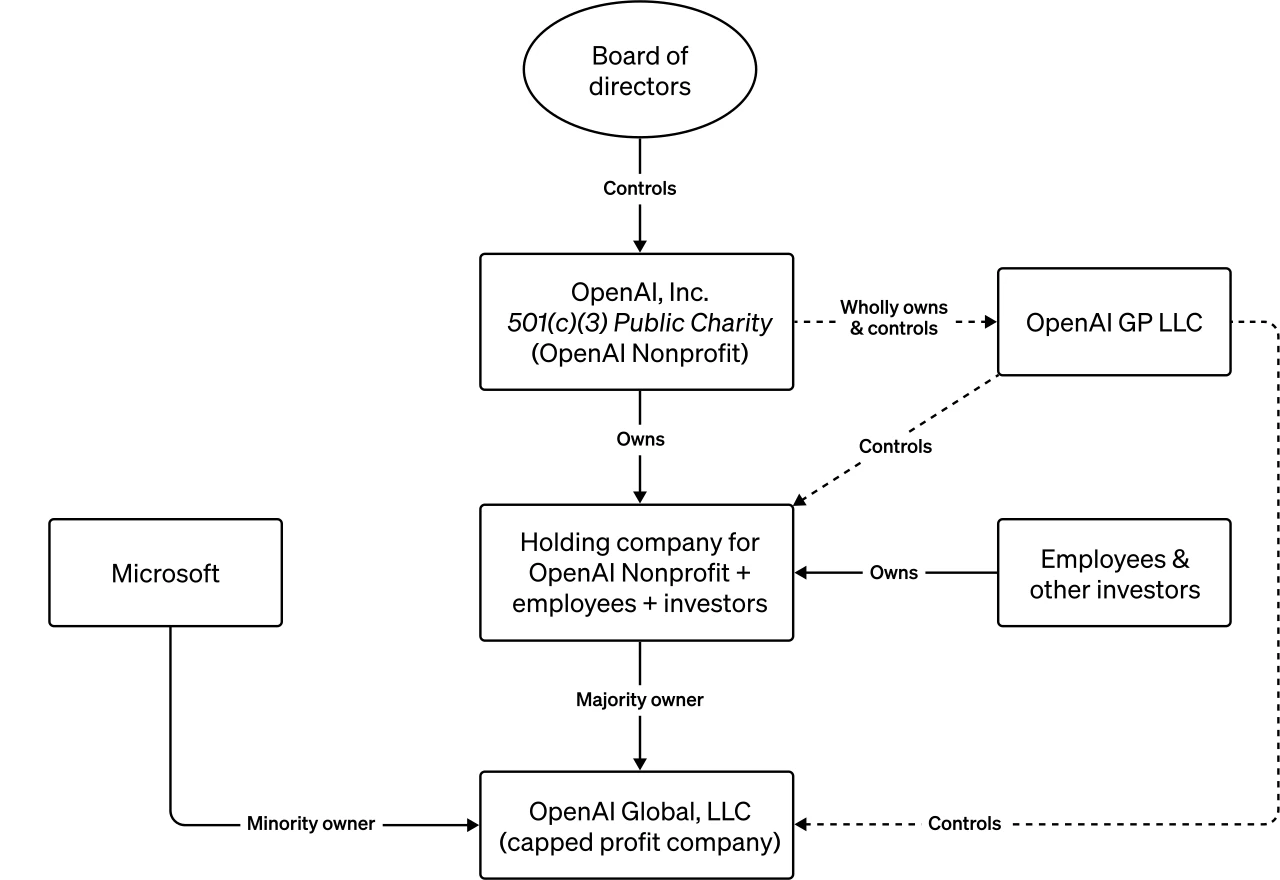

As noted above, OpenAI was founded as a 501(c)(3) non-profit research organization. It is governed by a board of directors.

The co-founders included Altman, Greg Brockman, and Ilya Sutskever, with initial funding from Altman, Brockman, Elon Musk, and Peter Thiel, among others.

So far, pretty straightforward, right?

It eventually became apparent that fulfillment of the organization’s mission would require far more than its initial $1 billion in pledges.

In 2019, OpenAI added a “capped” for-profit arm. It did so by creating a holding company, which, in turn, created the capped-profit company.

This would allow OpenAI to raise money from venture capitalists and offer employees stakes in the company. Investors’ profit would be capped at 100 times their investment, and the arrangement would allow for the commercialization of OpenAI products, as has happened with ChatGPT and DALL-E.
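To make the 100x cap concrete, here is a minimal Python sketch, assuming the cap works exactly as described above: an investor’s profit can never exceed 100 times the amount invested. The function name and the dollar figures are hypothetical illustrations, not OpenAI’s actual deal terms.

```python
def capped_profit(investment: float, gross_profit: float, cap_multiple: float = 100.0) -> float:
    """Return the profit an investor keeps under a simple profit cap.

    Hypothetical illustration: assumes the cap is a plain multiple of the
    original investment, as described in the text. Real deal terms are more complex.
    """
    if investment < 0 or cap_multiple < 0:
        raise ValueError("investment and cap_multiple must be non-negative")
    cap = investment * cap_multiple  # e.g. 100x the amount invested
    return min(gross_profit, cap)


# Hypothetical numbers: a $10 million stake that would otherwise earn $2 billion
kept = capped_profit(10_000_000, 2_000_000_000)
print(f"${kept:,.0f}")  # $1,000,000,000
```

In this made-up example, anything the stake earns beyond $1 billion would not go to the investor, which is the point of the structure: past a certain level, extra profit no longer rewards shareholders.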

The cap on profits was designed to reduce the pressure to maximize shareholder value. Additionally, the for-profit is legally required to pursue the nonprofit’s mission.

More crucial, though, is the nature of OpenAI’s board of directors. The for-profit subsidiary is fully controlled by the OpenAI non-profit. In OpenAI’s own words: “The Nonprofit’s principal beneficiary is humanity, not OpenAI investors.”

Board members are not allowed to own equity in the for-profit company.

This structure is clearly designed to incentivize acting on behalf of the “benefit to humanity” part of OpenAI’s mission over the typical corporate “maximizing shareholder value” behavior.

To be even more explicit, in OpenAI’s own words again: “the board determines when we've attained AGI. Again, by AGI we mean a highly autonomous system that outperforms humans at most economically valuable work. Such a system is excluded from IP licenses and other commercial terms with Microsoft, which only apply to pre-AGI technology.”

This is OpenAI’s visual representation of its organizational structure:

You’ll notice Microsoft there as a minority owner. Microsoft has reportedly invested $13 billion in OpenAI and has a 49% stake in the for-profit.

Sam Altman’s Firing

November 17

On November 17, OpenAI’s chief scientist Ilya Sutskever informed Sam Altman that he was being fired, setting off a series of events that would embroil the organization in turmoil and a highly public internal fight.

OpenAI simultaneously published a blog post announcing the firing, stating:

Mr. Altman’s departure follows a deliberative review process by the board, which concluded that he was not consistently candid in his communications with the board, hindering its ability to exercise its responsibilities. The board no longer has confidence in his ability to continue leading OpenAI.

The post also stated:

OpenAI was deliberately structured to advance our mission: to ensure that artificial general intelligence benefits all humanity. The board remains fully committed to serving this mission.

The lack of specific reasons for Altman’s firing left an opening for speculation to fill the void.

Greg Brockman was removed from his seat as chair of the board but was retained as an employee, according to the post. Brockman quickly resigned in solidarity with Altman, as did three senior researchers.

The New York Times’ Kevin Roose reported:

An all-hands meeting for OpenAI employees on Friday afternoon didn’t reveal much more. Ilya Sutskever, the company’s chief scientist and a member of its board, defended the ouster, according to a person briefed on his remarks. He dismissed employees’ suggestions that pushing Mr. Altman out amounted to a “hostile takeover” and claimed it was necessary to protect OpenAI’s mission of making artificial intelligence beneficial to humanity, the person said. [Emphasis mine.]

In October, Sutskever sat down for an interview with Will Douglas Heaven of MIT Technology Review, who wrote:

Instead of building the next GPT or image maker DALL-E, Sutskever tells me his new priority is to figure out how to stop an artificial superintelligence (a hypothetical future technology he sees coming with the foresight of a true believer) from going rogue.

Sutskever tells me a lot of other things too. He thinks ChatGPT just might be conscious (if you squint). He thinks the world needs to wake up to the true power of the technology his company and others are racing to create. And he thinks some humans will one day choose to merge with machines.

A lot of what Sutskever says is wild. But not nearly as wild as it would have sounded just one or two years ago. As he tells me himself, ChatGPT has already rewritten a lot of people’s expectations about what’s coming, turning “will never happen” into “will happen faster than you think.”

“It’s important to talk about where it’s all headed,” he says, before predicting the development of artificial general intelligence (by which he means machines as smart as humans) as if it were as sure a bet as another iPhone: “At some point we really will have AGI. Maybe OpenAI will build it. Maybe some other company will build it.”

November 18

The next day, Axios reported that an internal OpenAI memo from COO Brad Lightcap said:

"We can say definitively that the board's decision was not made in response to malfeasance or anything related to our financial, business, safety, or security/privacy practices. This was a breakdown in communication between Sam and the board."

This more or less ruled out the usual reason CEOs get fired, malfeasance, but it also seemed to contradict the board’s implication that Altman’s firing had to do with AGI safety.

November 19

Emmett Shear, the co-founder of Twitch, was appointed interim CEO.

Microsoft published a statement by CEO Satya Nadella:

We look forward to getting to know Emmett Shear and OAI’s new leadership team and working with them. And we’re extremely excited to share the news that Sam Altman and Greg Brockman, together with colleagues, will be joining Microsoft to lead a new advanced AI research team. We look forward to moving quickly to provide them with the resources needed for their success.

November 20

On November 20, The Information reported:

OpenAI’s board of directors approached Dario Amodei, the co-founder and CEO of rival large-language model developer Anthropic, about a potential merger of the two companies, said a person with direct knowledge. The approach…was part of an effort by OpenAI to persuade Amodei to replace Altman as CEO, the person said.

Anthropic was founded in 2021 by the aforementioned Dario Amodei and his sister Daniela, among other former OpenAI employees. Dario had served as OpenAI’s Vice President of Research.

According to VentureBeat, the split with OpenAI came about because of “differences over the group’s direction after it took a landmark $1 billion investment from Microsoft in 2019.”

Specifically, the split centered on fears of “industrial capture,” the potential for one corporation to control such a powerful technology.

Amodei declined OpenAI’s offer.

That same day, more than 650 of the reported 770 OpenAI employees threatened to quit if the board didn’t resign and reinstate Altman as CEO, according to Wired.

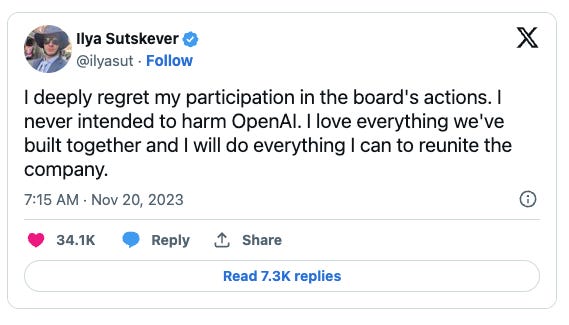

Oddly, Sutskever was among the signatories, and he subsequently expressed regret for his role in the board’s actions in a social media post:

November 21

The New York Times reported:

Before Sam Altman was ousted…he and the company’s board of directors had been bickering for more than a year. The tension got worse as OpenAI became a mainstream name thanks to its popular ChatGPT chatbot.

At one point, Mr. Altman…made a move to push out one of the board’s members because he thought a research paper she had co-written was critical of the company.

Another member, Ilya Sutskever, thought Mr. Altman was not always being honest when talking with the board. And some board members worried that Mr. Altman was too focused on expansion while they wanted to balance that growth with A.I. safety.

The research paper in question was co-authored by OpenAI board member Helen Toner and published by Georgetown University’s Center for Security and Emerging Technology, of which she is director of strategy.

In the paper, Toner criticized OpenAI’s approach to AI safety while praising rival Anthropic.

Altman reportedly called out Toner for criticizing the company publicly while the Federal Trade Commission was investigating it over data usage; Toner reportedly defended the paper as simply academic commentary.

And Sam Altman’s Re-Hiring

In the end, Altman maneuvered his way back to OpenAI.

Shortly after news broke of Altman’s firing, Satya Nadella launched a media blitz in support of Altman. Then he offered Altman and other OpenAI staff a new department at Microsoft where they could continue their work. Throw in the 49% stake Microsoft holds in the for-profit company and employees threatening to bolt en masse, and Altman was holding a pretty unbeatable hand.

So he’s back in, and most of the old board members are out.

Sutskever is one of those off the board, despite having read the room and changed his tune.

Composition of OpenAI’s Board

It is important to examine the composition of OpenAI’s board both before and after.

The Ousted Board

Prior to this story breaking, the board looked like this:

Sam Altman, OpenAI CEO

Greg Brockman, OpenAI president and board chair

Ilya Sutskever, OpenAI chief scientist

Independent directors:

Helen Toner, director of strategy at Georgetown’s Center for Security and Emerging Technology,

Tasha McCauley, technology entrepreneur, and

Adam D’Angelo, Quora CEO

As you have seen, Sutskever has grave worries about the effect AGI may have on the world, worries he shares with Helen Toner.

Kara Swisher said on Twitter:

The issues with Tasha McCauley are deeper and, as described to me by many sources, she has used very apocalyptic terms for her fears of the tech itself and who should and should not have their “fingers on the button.”

So we know where McCauley stands.

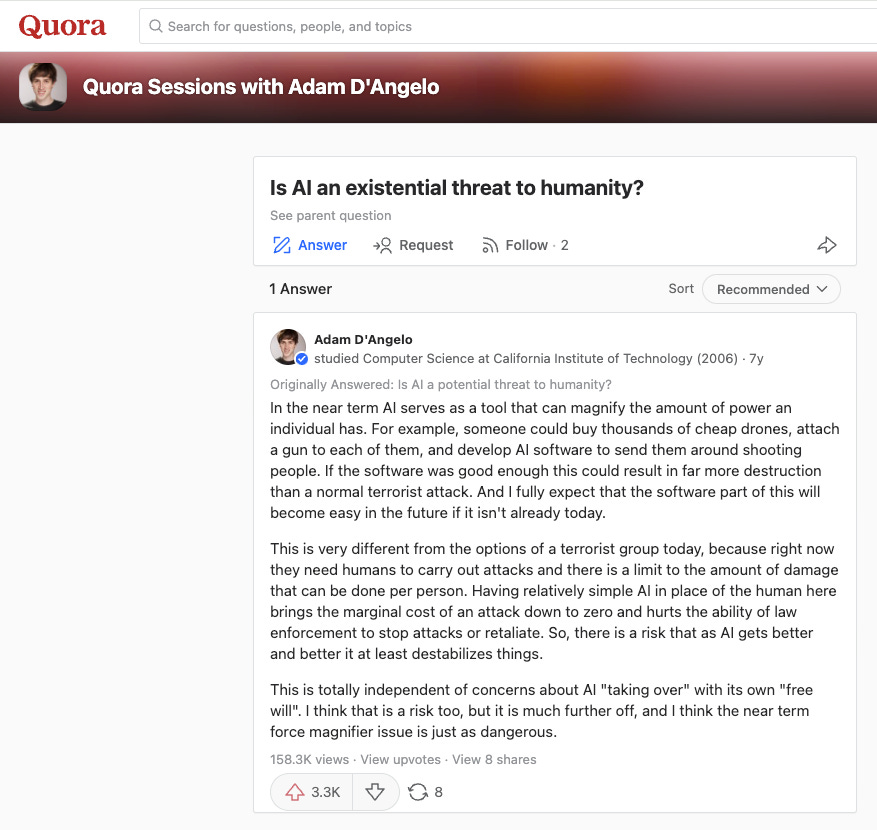

Seven years ago, Adam D’Angelo posted his thoughts on the threat of AI on Quora, stating:

Yet he remains on the board, apparently because of his long-time friendship with Altman and his role in brokering Altman’s return.

Effective Altruism

The philosophy behind OpenAI’s governing structure is informed by the “effective altruism” movement. Born at Oxford University and embraced by some Silicon Valley elite, the idea is to use evidence and reason to figure out how to benefit others as much as possible, and to take action on that basis.

It sounds reasonable enough, but it has lost some of its appeal because its best-known proponent was crypto criminal Sam Bankman-Fried.

Three of the six seats on OpenAI’s board are occupied by people with deep ties to effective altruism: think tank researcher Helen Toner, Quora CEO Adam D’Angelo, and RAND scientist Tasha McCauley. A fourth member, OpenAI co-founder and chief scientist Ilya Sutskever, also holds views on AI that are generally sympathetic to EA.

The Interim Board

OpenAI’s new board consists of:

Adam D’Angelo

Bret Taylor, who will serve as board chair. He is the former co-CEO of Salesforce and the former chairman of Twitter.

Lawrence Summers, the former Treasury Secretary, a veteran of the Clinton and Obama administrations, and currently president emeritus of Harvard University.

As noted above, D’Angelo has at least some trepidation about the societal harm that could be caused by AI.

Taylor forced the sale of Twitter to Musk after the Mussolini wannabe got cold feet. Taylor also co-founded FriendFeed, which was my favorite Twitter competitor back in the day, until Facebook acquired and killed it.

Summers is a bit of a controversial figure.

As Treasury Secretary, he helped deregulate the derivatives market, which would later play a crucial role in the 2008 financial crash. He’s been ringing alarm bells about a hard landing for the economy since early 2022, and yet the economy has just kept improving. So his instincts don’t seem especially sharp.

Axios notes what others are saying about Summers:

"There is no greater indication that OpenAI is unserious about the interests of humanity than their elevation of Larry Summers to its Board of Directors," says the Revolving Door Project's Jeff Hauser in a press release.

Journalists, wrote The New Yorker's John Cassidy upon Summers' 2010 departure from the White House, "aren't the only folks Larry considers intellectually beneath him. Such a category would include most members of President Obama's cabinet and their top policy advisers; many of his colleagues in the White House; virtually all foreign officials; ninety per cent of the Harvard faculty; and a similar proportion, or possibly higher, of his fellow academic economists."

Summers has certainly cozied up to Silicon Valley elites in recent years, sitting on the boards of Block and Lending Club and advising the likes of venture capital firm Andreessen Horowitz, according to Axios.

On the other hand, in 2017 he took Trump’s Treasury Secretary Steve Mnuchin to task for saying AI is not even on his radar. In a Washington Post editorial, Summers wrote:

Mnuchin’s comment about the lack of impact of technology on jobs is to economics approximately what global climate change denial is to atmospheric science or what creationism is to biology. Yes, you can debate whether technological change is in net good. I certainly believe it is. And you can debate what the job creation effects will be relative to the job destruction effects. I think this is much less clear, given the downward trends in adult employment, especially for men over the past generation.

But I do not understand how anyone could reach the conclusion that all the action with technology is half a century away. Artificial intelligence is behind autonomous vehicles that will affect millions of jobs driving and dealing with cars within the next 15 years, even on conservative projections. Artificial intelligence is transforming everything from retailing to banking to the provision of medical care. Almost every economist who has studied the question believes that technology has had a greater impact on the wage structure and on employment than international trade and certainly a far greater impact than whatever increment to trade is the result of much debated trade agreements.

While he’s expressed concerns over the risk AI poses to jobs, he also seems awfully comfortable among tech’s libertarian moguls.

Summers does seem to have some measure of credibility with a variety of stakeholders that are important to OpenAI:

With the Silicon Valley set generally and Microsoft in particular,

With those concerned about the potential harms AI presents, and, perhaps most importantly,

With the policymakers who will eventually want to regulate the industry.

Keep in mind that this is an interim board, so there will almost certainly be additions, and I’d expect one of them to represent Microsoft’s interests.

The Board Upheld Its Responsibilities

Here’s the thing, though: The OpenAI board functioned exactly as it was designed to function.

It was designed to halt the development of AGI until safeguards could be put in place to protect humanity, or at least to serve as an early-warning system for the rest of us.

We still don’t know exactly what the catalyst for firing Altman was, but it would appear that’s what the board was trying to do.

Commercialization Of OpenAI

One line of thought posits that Altman’s push to commercialize OpenAI’s technology in the form of DALL-E, ChatGPT, the ChatGPT API, and most recently, custom GPTs, was too fast and too soon, without accounting for the risks of unleashing these products on the world.

Microsoft Dominance

Another line of thought concerned Microsoft’s 49% stake, which tilted the competitive landscape enormously in Microsoft’s favor (as you could see with the integration of OpenAI technology into Bing, the Microsoft Office suite, Copilot in Visual Studio, and even LinkedIn), risking one company becoming the dominant player in AI.

Altman’s Side-Hustles

Yet another line of thought had to do with the many side-hustles Altman was involved in. According to Bloomberg:

…Altman's myriad side hustles, including the controversial crypto endeavor Worldcoin, which seeks to scan the eyeballs of every human on earth, or his recent attempt to raise money for an AI chip company to take on Nvidia Corp.

Those could certainly be considered conflicts of interest.

AI Agents

What happens when you give people the power to create AI that interacts with other AI?

If you give people the ability to create their own custom GPTs, then how much of a stretch is it to allow people to create AIs that can talk to other AIs and complete tasks between them?

Sure, there are a lot of benefits to be had from deploying autonomous AIs, but they could certainly cause a lot of havoc as well.

Q*

The last, most intriguing, and perhaps most frightening theory is that OpenAI was on the brink of an innovation that could conceivably complete the organization’s mission of developing artificial general intelligence.

Reuters reported:

…several staff researchers wrote a letter to the board of directors warning of a powerful artificial intelligence discovery that they said could threaten humanity, two people familiar with the matter told Reuters.

The previously unreported letter and AI algorithm were key developments before the board's ouster of Altman, the poster child of generative AI, the two sources said.

…OpenAI, which declined to comment, acknowledged in an internal message to staffers a project called Q* and a letter to the board before the weekend's events, one of the people said.

…Some at OpenAI believe Q* (pronounced Q-Star) could be a breakthrough in the startup's search for what's known as artificial general intelligence (AGI), one of the people told Reuters.

And Business Insider reports:

The ability to solve basic math problems may not sound that impressive, but AI experts told Business Insider it would represent a huge leap forward from existing models, which struggle to generalize outside the data they are trained on.

"If it has the ability to logically reason and reason about abstract concepts, which right now is what it really struggles with, that's a pretty tremendous leap," said Charles Higgins, a cofounder of the AI-training startup Tromero who's also a Ph.D. candidate in AI safety. He added, "Maths is about symbolically reasoning — saying, for example, 'If X is bigger than Y and Y is bigger than Z, then X is bigger than Z.' Language models traditionally really struggle at that because they don't logically reason, they just have what are effectively intuitions."

Sophia Kalanovska, a fellow Tromero cofounder and Ph.D. candidate, told BI that Q*'s name implied it was a combination of two well-known AI techniques, Q-learning and A* search. She said this suggested the new model could combine the deep-learning techniques that power ChatGPT with rules programmed by humans. It's an approach that could help fix the chatbot's hallucination problem.
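Nobody outside OpenAI has said how Q* actually works, so the sketch below illustrates only the textbook Q-learning half of Kalanovska’s guess: an agent learns, by trial and error, a value Q(state, action) that estimates the long-term reward of each move. The toy corridor environment and every parameter (alpha, gamma, epsilon, the number of episodes) are assumptions chosen for illustration; this is not OpenAI’s model.

```python
import random

# Hypothetical toy environment: a corridor of states 0..4.
# The agent starts at 0 and earns a reward of 1.0 for reaching state 4.
N_STATES = 5
ACTIONS = (-1, 1)  # step left or step right


def step(state: int, action: int) -> tuple[int, float, bool]:
    """Apply an action, clamping the agent inside the corridor."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes: int = 200, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.1, seed: int = 0) -> dict[tuple[int, int], float]:
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually take the best-known action, occasionally explore.
            # Ties between equal Q-values are broken randomly.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: (q[(state, a)], rng.random()))
            next_state, reward, done = step(state, action)
            # Q-learning update: nudge Q(s, a) toward reward + discounted best future value.
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q


q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(policy)  # [1, 1, 1, 1]: the learned policy always steps right, toward the reward
```

Note that the agent is never told where the reward is; it discovers the best move in each state purely from experience, which is what distinguishes this approach from hand-written rules.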

Brian Wang at NextBigFuture writes:

The battle at OpenAI was possibly due to a massive breakthrough dubbed Q* (Q-learning). Q* is a precursor to AGI. What Q* might have done is bridged a big gap between Q-learning and pre-determined heuristics. This could be revolutionary, as it could give a machine “future sight” into the optimal next step, saving it a lot of effort. This means that machines can stop pursuing suboptimal solutions, and only optimal ones. All the “failure” trials that machines used to have (eg. trying to walk but falling) will just be put into the effort of “success” trials. OpenAI might have found a way to navigate complex problems without running into typical obstacles
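The “pre-determined heuristics” side of this speculation refers to search algorithms such as A*, which always expands the most promising option first by adding a heuristic estimate of the remaining distance to the cost already paid. Here is a minimal Python sketch of A* on a small grid, using Manhattan distance as the heuristic; the grid format and function names are hypothetical, and nothing here reflects how Q* actually works.

```python
import heapq
from typing import Optional

Cell = tuple[int, int]  # (row, column)


def manhattan(a: Cell, b: Cell) -> int:
    """Heuristic: grid steps remaining if there were no walls (never overestimates)."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def a_star(grid: list[str], start: Cell, goal: Cell) -> Optional[list[Cell]]:
    """Shortest 4-connected path through a grid of '.' (open) and '#' (wall), or None."""
    rows, cols = len(grid), len(grid[0])
    frontier = [(manhattan(start, goal), 0, start)]  # entries are (f = g + h, g, cell)
    came_from: dict[Cell, Cell] = {}
    best_g = {start: 0}  # cheapest known cost to reach each cell
    while frontier:
        _, g, cell = heapq.heappop(frontier)  # most promising cell first
        if cell == goal:
            path = [cell]
            while cell in came_from:  # walk back to the start
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > best_g[cell]:
            continue  # stale entry: a cheaper route to this cell was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                ng = g + 1
                if ng < best_g.get(nxt, float("inf")):
                    best_g[nxt] = ng
                    came_from[nxt] = cell
                    heapq.heappush(frontier, (ng + manhattan(nxt, goal), ng, nxt))
    return None


grid = [
    "....",
    ".##.",
    "....",
]
path = a_star(grid, (0, 0), (2, 3))
print(len(path) - 1)  # 5 steps, the minimum possible
```

Because Manhattan distance never overestimates the remaining steps on a four-connected grid, A* is guaranteed to return a shortest path here while skipping many of the dead ends a blind search would explore.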

If OpenAI has developed a shortcut to logical reasoning (and math is logical reasoning), then it has conquered (or will eventually conquer) a major limitation of Large Language Models (LLMs): their inability to reliably do basic math.

LLMs are great at predicting text, which is basically pattern recognition, but that doesn’t amount to reasoning. Succeeding at mathematics requires reasoning.
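For contrast with pattern prediction, here is the kind of explicit, rule-based step Charles Higgins describes, as a minimal Python sketch: given facts such as “X is bigger than Y” and “Y is bigger than Z,” it applies the transitivity rule until no new facts appear. This is classical symbolic inference, not a description of how any LLM or Q* works, and the function name is hypothetical.

```python
from itertools import product


def transitive_closure(facts: set[tuple[str, str]]) -> set[tuple[str, str]]:
    """Derive every (a, c) implied by chaining (a, b) and (b, c).

    Each pair (a, b) is read as "a is bigger than b".
    """
    closure = set(facts)
    while True:
        # Rule: if a > b and b > d, then a > d
        derived = {(a, d) for (a, b), (c, d) in product(closure, closure) if b == c}
        if derived <= closure:  # nothing new: a fixed point has been reached
            return closure
        closure |= derived


facts = {("X", "Y"), ("Y", "Z")}
print(("X", "Z") in transitive_closure(facts))  # True
```

Every conclusion here follows mechanically from the stated rule, with no guessing involved; that guarantee is exactly what next-word prediction lacks.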

Regardless of what the catalyst was, a majority of the board believed that, under Sam Altman’s leadership, OpenAI was developing artificial general intelligence in a way that was either dangerous to one degree or another or at least needed to slow down enough to allow safeguards to be implemented.

Who Controls AI?

So it would appear, as Kevin Roose declared in The New York Times, that “A.I. Belongs to the Capitalists Now.”

It is hardly shocking that capital has blown through a nonprofit governance structure with relative ease. When there are billions to be made, woe to anyone who stands in the way of a self-respecting titan of industry.

We’ve seen this movie before, but in the past, the resources those titans of industry could exploit were limited. You can only build so many oil drills or lay so many miles of railroad tracks or construct so many steel mills.

The titans of the information age have no such physical restrictions. Their product is information, which is ever-renewing and infinitely expanding.

We can talk about open source as a countervailing force to corporate AI development, but with the resources of a Microsoft or a Facebook or an Amazon at their disposal, do you seriously think open source can be the check to balance corporate power?

Many a science fiction dystopian plot revolves around the supremacy of corporations as the primary governing entities in society.

So we need to ask ourselves: Is it wise for such a powerful technology as AI (never mind artificial general intelligence) to be concentrated in the hands of just a few corporate entities?

Especially, as we’ve learned from the age of social media, absent meaningful regulation?

Digital Marketing News

Artificial Intelligence

The Guardian by Douglas Rushkoff - ‘We will coup whoever we want!’: the unbearable hubris of Musk and the billionaire tech bros - Challenging each other to cage fights, building apocalypse bunkers – the behaviour of today’s mega-moguls is becoming increasingly outlandish and imperial

Unlike their forebears, contemporary billionaires do not hope to build the biggest house in town, but the biggest colony on the moon. In contrast, however avaricious, the titans of past gilded eras still saw themselves as human members of civil society. Contemporary billionaires appear to understand civics and civilians as impediments to their progress, necessary victims of the externalities of their companies’ growth, sad artefacts of the civilisation they will leave behind in their inexorable colonisation of the next dimension. Unlike their forebears, they do not hope to build the biggest house in town, but the biggest underground lair in New Zealand, colony on the moon or Mars or virtual reality server in the cloud.

While plans for Peter Thiel’s 193-hectare (477-acre) “doomsday” escape, complete with spa, theatre, meditation lounge and library, were ultimately rejected on environmental grounds, he still wants to build a startup community that floats on the ocean, where so-called seasteaders can live beyond government regulation as well as whatever disasters may befall us back on the continents.

Especially in the wake of the OpenAI drama, keep in mind the fundamental weirdness of some of these tech bros.

The Artificial Corner by Pycoach - OpenAI Is Slowly Killing Prompt Engineering With The Latest ChatGPT and DALL-E Updates - When OpenAI introduced GPTs they presented them as a new way to create custom versions of ChatGPT without coding and with even chances to monetize them on the GPT store.

Something that was overlooked, though, was some of the functionalities of GPT Builder. GPT Builder is an assistant that helps us automatically configure a GPT, which has “instructions” as a main component.

What are instructions? They’re just prompts. Those that we manually crafted until now.

Experienced ChatGPT users frequently use prompting techniques in their prompts. An example of a prompting technique is role prompting.

Act as a job interviewer. I’ll be the candidate and you’ll ask me interview questions for the X position …

GPT Builder is not only able to automatically generate prompts for us but apply some prompting techniques as well. We only have to give it an idea and it’ll craft a complete prompt for us.

All this is done via a chat with GPT Builder.

This was inevitable. I’ve been playing around building my own custom GPTs with OpenAI’s GPT Builder. It is pretty impressive technology with some frustrating limitations, too. I would expect that will change as updates to the GPT Builder are released. But this is another topic for another post. Stay tuned. Meanwhile:

The Short, Happy Life Of A Prompt Engineer

Generative AI is already creating a new job title: Prompt Engineer. And there’s a lot of interest in it.

Artificial Intelligence in Plain English by Anthony Alcaraz - How “Memories” Can Make Chatbots Smarter and More Personalized - Exciting new research from CMU and Allen Institute shows how giving chatbots a “memory” could make them smarter and more personalized over time.

The paper “MemPrompt: Memory-assisted Prompt Editing with User Feedback” proposes a novel method called MemPrompt that allows chatbots like large language models (LLMs) such as GPT-3 to continuously improve through interactive feedback from users, without needing full retraining. This represents a technique in natural language processing that can enhance chatbots’ language understanding and generation capabilities…

MemPrompt provides a novel solution — give the chatbot a memory that can store clarifying feedback from users when the chatbot misunderstands their intent.

For a new user query, MemPrompt first checks if this chatbot has misunderstood a similar question in the past. If so, the clarifying feedback is retrieved from the memory and appended to the current query. This additional context helps the chatbot interpret the user’s intent correctly by preventing it from repeating past mistakes.

This is a fascinating area of AI development, and one I’ll address in more detail in a future post. The lack of memory across sessions is one of the most striking limitations of existing AI chatbots. Here’s the link to the aforementioned paper [PDF].
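The retrieve-and-append loop MemPrompt is described as using can be sketched in a few lines of Python. This is a minimal illustration, not the paper’s implementation: the class and method names are hypothetical, and Python’s difflib string similarity, with an arbitrary 0.8 threshold, stands in for whatever retrieval method the paper actually uses to find “similar” past questions.

```python
from dataclasses import dataclass, field
from difflib import SequenceMatcher
from typing import Optional


@dataclass
class FeedbackMemory:
    """Hypothetical memory of past misunderstandings: question -> clarifying feedback."""
    threshold: float = 0.8  # arbitrary similarity cutoff (an assumption)
    entries: dict[str, str] = field(default_factory=dict)

    def remember(self, question: str, feedback: str) -> None:
        """Store the user's clarification after the chatbot misreads a question."""
        self.entries[question] = feedback

    def lookup(self, question: str) -> Optional[str]:
        """Return feedback for the most similar past question, if it is similar enough."""
        best_score, best_feedback = 0.0, None
        for past_question, feedback in self.entries.items():
            score = SequenceMatcher(None, question.lower(), past_question.lower()).ratio()
            if score > best_score:
                best_score, best_feedback = score, feedback
        return best_feedback if best_score >= self.threshold else None

    def build_prompt(self, question: str) -> str:
        """Append any retrieved clarification to the new query before it reaches the model."""
        feedback = self.lookup(question)
        if feedback is None:
            return question
        return f"{question}\nClarification from earlier feedback: {feedback}"


memory = FeedbackMemory()
memory.remember("What word is similar to good?", "I meant a synonym, not a word that sounds alike.")
# Prints the new question followed by the stored clarification
print(memory.build_prompt("What word is similar to great?"))
```

The model itself is never retrained in this setup; only its input changes, which is why the approach can improve a chatbot without full retraining.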

VentureBeat by Carl Franzen - Midjourney’s new style tuner is here. Here’s how to use it. - Before style tuning, users had to repeat their text descriptions to generate consistent styles across multiple images — and even this was no guarantee, since Midjourney, like most AI art generators, is built to offer a functionally infinite variety of image styles and types.

Now, instead of relying on their language, users can select between a variety of styles and obtain a code to apply to all their works going forward, keeping them in the same aesthetic family. Midjourney users can also elect to copy and paste their code elsewhere to save it and reference it going forward, or even share it with other Midjourney users in their organization to allow them to generate images in that same style. This is huge for enterprises, brands, and anyone seeking to work on group creative projects in a unified style.

I haven’t tried this yet, mostly because it doesn’t fit with what I’m trying to get out of Midjourney and I’ve already defined the style I use, but it does look pretty cool.

The Verge by Allison Johnson - Bard can now watch YouTube videos for you - Bard’s YouTube extension can now handle complex queries about specific video content, like recipe quantities and instruction summaries.

This will save viewers time but may pose significant problems for marketers: if Bard can pull the key details out of a video, fewer people may need to watch it. We’ll have to think about video production in a new light with this new capability.

Public Relations

The Guardian by Adam Lowenstein - Revealed: how top PR firm uses ‘trust barometer’ to promote world’s autocrats - Edelman, world’s largest public relations company, paid millions by Saudi Arabia, UAE and other repressive regimes

For years, Edelman has reported that citizens of authoritarian countries, including Saudi Arabia, Singapore, the United Arab Emirates and China, tend to trust their governments more than people living in democracies do.

But Edelman has been less forthcoming about the fact that some of these same authoritarian governments have also been its clients. Edelman’s work for one such client – the government of the UAE – will be front and center when world leaders convene in Dubai later this month for the UN’s Cop28 climate summit.

The Guardian and Aria, a non-profit research organization, analyzed Edelman trust barometers, as well as Foreign Agents Registration Act (Fara) filings made public by the Department of Justice, dating back to 2001, when Edelman released its first survey of trust. (The act requires US companies to publish certain information about their lobbying and advocacy work for foreign governments.) During that time Edelman and its subsidiaries have been paid millions of dollars by autocratic governments to develop and promote their desired images and narratives.

Polling experts have found that public opinion surveys tend to overstate the favorability of authoritarian regimes because many respondents fear government reprisal. That hasn’t stopped these same governments from exploiting Edelman’s findings to burnish their reputations and legitimize their holds on power.

Booooo! Looks like Edelman might need a PR firm.

Search Engines

Search Engine Land by Barry Schwartz - Google updates search quality raters guidelines - Although search quality evaluators’ ratings do not directly impact rankings, they do provide feedback that helps Google improve its algorithms. It is important to spend some time looking at what Google changed in this updated version of the document and compare that to the previous version of the document to see if we can learn more about Google’s intent on what websites and web pages Google prefers to rank. Google made those additions, edits, and deletions for a reason.

If you want to get a sense of how Google rates the reputation of a given web page, read the company’s search quality raters guidelines.

Social Media

Meta by Nick Clegg - New Tools to Support Independent Research - To understand the impact social media apps like Facebook and Instagram have on the world, it’s important to support rigorous, independent research. That’s why Meta has been committed to an open and privacy-protective approach to research for many years, including making tools available to support public interest research, such as the US 2020 studies.

Over the past few months we gave beta access to our new Meta Content Library and API tools. After multiple rounds of feedback with researchers and other stakeholders, we are now in a position to roll these tools out more broadly.

Our Meta Content Library and API tools provide access to near real-time public content from Pages, Posts, Groups and Events on Facebook, as well as from creator and business accounts on Instagram. Details about the content, such as the number of reactions, shares, comments and, for the first time, post view counts are also available. Researchers can search, explore and filter that content either through a graphical user interface (UI) or through a programmatic API.

👏👏👏 Hopefully Threads will be added soon, too.

Social Media Today by Andrew Hutchinson - Meta Highlights What’s Driving Threads Engagement - Various studies have shown that the content that best drives emotional response, particularly in regard to inspiring comments, is material that prompts high-arousal emotions, such as anger, fear, and happiness. If you can hit these emotional triggers, your content is much more likely to go viral, which is what social algorithms incidentally incentivize by feeding into engagement.

Meta doesn’t want Threads to go down that path, which could also be an element to consider, in that Meta could be actively looking to lessen the impact of certain elements in order to encourage a different type of in-stream engagement.

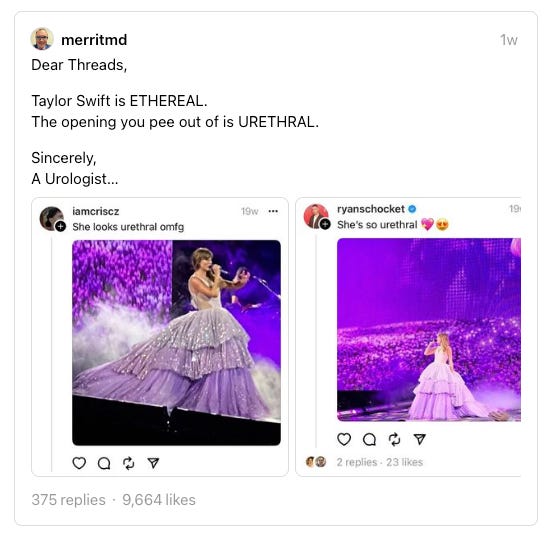

I’ve been really impressed with Threads. It’s the social channel I’m personally spending the most time on. The algorithm does appear to favor engagement, but not engagement driven by negative emotional triggers. I can find most of the types of accounts I want content from there (sports is still a little lacking).

It is quickly becoming the Twitter alternative for news and politics, but with the tools to deal with trolls and keep the place relatively civil. President Biden, the First Lady, Vice President Harris and the Second Gentleman have recently joined, as has the White House.

If you’re using Threads, say hi! I’m @deerickson.

Business Insider by Sawdah Bhaimiya - An agency created an AI model who earns up to $11,000 a month because it was tired of influencers 'who have egos' - A Spanish modeling agency said it's created the country's first AI influencer, who can earn up to 10,000 euros, or $11,000, a month as a model.

Euronews reported the news, based on an interview with Rubén Cruz, founder of the Barcelona-based modeling agency The Clueless, which created the influencer.

The AI-generated woman, Aitana López, is a pink-haired 25-year-old. Her account has amassed 124,000 followers on Instagram.

Cruz told Euronews he decided to design López after having trouble working with real models and influencers. "We started analyzing how we were working and realized that many projects were being put on hold or canceled due to problems beyond our control. Often it was the fault of the influencer or model and not due to design issues," he said.

I give you my post from May:

Influencer AI

The Trend Toward Virtual Personas

Twelve years ago, my buddy Pat Lilja and I discussed the popularity of a Japanese virtual pop star called Hatsune Miku. At the time, this J-Pop phenom was filling arenas in Los Angeles with…

Music Monday

In case you missed it, here’s The Beatles’ newest song, Now and Then. It was made possible by technology developed by director Peter Jackson during his production of The Beatles documentary, Get Back, which enabled the separation of John Lennon’s voice from the piano on a 1970s-era demo recorded on a cassette tape in Lennon’s home.

Threads

Stealing an idea from Casey Newton.