Opinion Polling Literacy

Lies, damn lies, and statistics. How to read political polls.

Nearly ten years ago the agency I was with had just obtained the results of a public opinion poll we had commissioned on behalf of a client in order to gain some consumer insights into the client’s services.

The job of interpreting the data was assigned to an account manager with about seven years of experience in public relations.

When I got the results of her interpretation of the data, it quickly became apparent that she didn’t know how to read polling results.

This is no criticism of her abilities; she’d probably never been asked to interpret a poll before. And public relations professionals are typically language people, not numbers people. You can count me as Exhibit A: I got a BA in English.

Nevertheless, I was a bit shocked when it dawned on me that she didn’t understand polling. One of the primary things we strategic communicators do to keep our proverbial ear to the ground is to pay attention to public opinion polling so as to understand consumer sentiment.

What gave it away for me was that her analysis included conclusions on questions for which the data was within the margin of error.

I’ve been thinking a lot about that experience now that we are being bombarded with political polling results heading into the 2024 presidential election.

If someone who reasonably should have known how to read polls did not, can we reasonably expect the general public’s polling literacy to be any better? And what does that mean for an ostensibly well-informed electorate as we approach an election where the very survival of democracy is the primary choice to be made?

A Public Issues Reputation Algorithm

Political and policy polling is essentially a crude algorithm designed to divine the reputation of policy issues and political leaders among the electorate. But there are many problems with this particular “algorithm.”

The Many Misuses Of Political Polling

If you pay any attention whatsoever to online conversations about politics, you have no doubt come across discussions about the failings of political polling by media organizations.

I spent many years publishing a website, a newsletter, and a podcast that tracked public opinion polling of all varieties, so I do not dismiss opinion polling out of hand. In fact, one of my first jobs out of college was with a public opinion research firm.

The industry has always had its challenges. But because the stakes are so very high when it comes to politics and public policy, political polling deserves a lot more scrutiny and criticism than consumer-related polling.

Since polling results can have a very real effect on shaping the public’s opinion, this year it is absolutely crucial for the public to question and accurately interpret the data behind the headlines.

Let me touch briefly on a few of the common criticisms leveled at political polling and then we’ll discuss real-life examples.

Sensationalism & Bias

There's a perception that media polls might be biased or sensationalized to attract viewers or readers, potentially influencing how questions are framed and results are reported.

Overemphasis On Horse-Race Journalism

Media polls often focus on the "horse race" aspect of politics (who's winning, who's losing) rather than substantive policy issues, which can skew public perception and discourse.

Sampling Issues

Similar to general polling criticisms, media polls can suffer from non-representative samples, leading to inaccurate portrayals of public opinion.

Effect On Public Opinion

Media polls can create a bandwagon effect, where the public's perception of a candidate or issue is influenced by poll results, potentially affecting election outcomes.

Lack Of Transparency

There's often criticism over the lack of transparency in the methodology used by media organizations, such as sample size, margin of error, and sampling technique.

Frequency & Timing

The frequency and timing of media polls can contribute to fluctuations in public opinion, especially close to elections, leading to accusations of influencing rather than measuring public sentiment.

Conflict Of Interest

Media organizations may have inherent conflicts of interest, especially if they have political affiliations or leanings, raising questions about the objectivity of their polls.

Limited Scope & Depth

Media polls might focus on a narrow range of issues or lack depth in their questioning, failing to capture the complexities of public opinion.

Interpretation & Presentation

How media outlets interpret and present poll results can sometimes be misleading, emphasizing certain aspects for dramatic or narrative purposes.

November 2023 New York Times Poll

The New York Times/Siena College poll released on November 5, 2023 is a superb example that helps to illustrate many of these criticisms. Let’s go through them category by category:

This is the headline and subhead of the Times article about the poll:

Trump Leads in 5 Critical States as Voters Blast Biden, Times/Siena Poll Finds

Voters in battleground states said they trusted Donald J. Trump over President Biden on the economy, foreign policy and immigration, as Mr. Biden’s multiracial base shows signs of fraying.

Buried at the bottom of the article—where media polling methodology is always buried—was this line: “The margin of sampling error for each state is between 4.4 and 4.8 percentage points.”

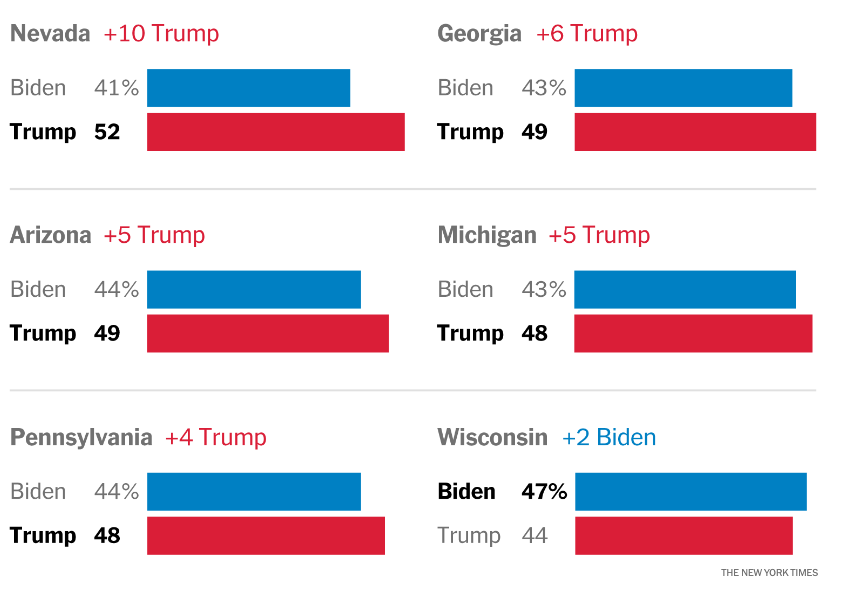

Here are the results for the six battleground states surveyed by the Times.

According to the Times’ margin of error, each state result could be as much as 4.4 to 4.8 percentage points higher or lower than reported. Now, let’s recalculate the results by shifting 4.8 percentage points in Biden’s favor, adding them to his share and taking them from Trump’s:

Nevada:

Biden 45.8%

Trump 47.2%

Georgia:

Biden 47.8%

Trump 44.2%

Arizona:

Biden 48.8%

Trump 44.2%

Michigan:

Biden 47.8%

Trump 43.2%

Pennsylvania:

Biden 48.8%

Trump 43.2%

Wisconsin:

Biden 51.8%

Trump 39.2%

We can recalculate in the opposite direction, of course, and it would look a lot different for Biden. This is a simple example but it gives you an idea of how margin of error can change your interpretation of polling results.
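The same arithmetic can be run in both directions. The sketch below is a hypothetical illustration, not the Times’ method: it starts from the Biden-favorable figures listed above, assumes they were produced by adding 4.8 points to Biden and subtracting 4.8 from Trump, backs out the implied toplines, and then applies the same shift in Trump’s favor. The names `MARGIN`, `biden_favorable`, and `shift` are my own.

```python
# Illustrative sketch: move a two-candidate result by the margin of error
# in either direction. All names here are hypothetical.

MARGIN = 4.8  # upper end of the per-state margin of sampling error quoted by the Times

# Biden-favorable figures as listed in the article: (Biden, Trump)
biden_favorable = {
    "Nevada": (45.8, 47.2),
    "Georgia": (47.8, 44.2),
    "Arizona": (48.8, 44.2),
    "Michigan": (47.8, 43.2),
    "Pennsylvania": (48.8, 43.2),
    "Wisconsin": (51.8, 39.2),
}


def shift(result, points):
    """Add `points` to Biden's share and subtract them from Trump's."""
    biden, trump = result
    return (round(biden + points, 1), round(trump - points, 1))


for state, adjusted in biden_favorable.items():
    implied = shift(adjusted, -MARGIN)         # undo the Biden-favorable shift
    trump_favorable = shift(implied, -MARGIN)  # shift the same distance toward Trump
    print(f"{state}: implied topline {implied}, Trump-favorable {trump_favorable}")
```

Under those assumptions, Nevada’s implied topline is Biden 41, Trump 52, and the Trump-favorable reading stretches that to 36.2 versus 56.8. The same poll supports both stories.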

Typically, polls with larger sample sizes have smaller margins of error. The smaller the margin of error, the more confidence you are supposed to have in the poll results.

The total sample size for the Times poll was 3,662 across all six states. That means roughly 610 registered voters are supposed to be representative for the entire population of each state.
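To put that per-state figure in context, here is a rough sketch of the textbook margin-of-error formula for a simple random sample at 95% confidence. I am assuming this formula only approximates what the Times did; published margins typically also account for weighting. The function name `simple_moe` is hypothetical.

```python
import math


def simple_moe(n, p=0.5, z=1.96):
    """Margin of error, in percentage points, for a simple random sample.

    n: number of respondents; p: assumed proportion (0.5 is the worst case);
    z: z-score for the confidence level (1.96 corresponds to 95%).
    """
    return 100 * z * math.sqrt(p * (1 - p) / n)


total_sample = 3662
states = 6
per_state = total_sample / states  # about 610 respondents per state

print(f"Respondents per state: {per_state:.0f}")
print(f"Simple-random-sample margin of error: +/-{simple_moe(per_state):.1f} points")
```

The result lands a little under four points, below the 4.4 to 4.8 the Times reported, which would be consistent with the published figures including an adjustment for weighting.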

Furthermore, this post from The Cascadia Advocate points out that the poll oversampled Republican voters, a fact that went unreported in both the New York Times story about the poll and the mass coverage of the poll by other media outlets.

The article notes:

The crosstabs for these polls show that 75% of the respondents identify as very conservative, somewhat conservative, or “moderate” (which isn’t an ideology):

QUESTION: Do you consider yourself politically liberal, moderate, or conservative?

FOLLOW UP: (If liberal or conservative) Is that very or somewhat?

ANSWERS [ALL STATES]:

Very liberal: 10%

Somewhat liberal: 10%

Moderate: 39%

Somewhat conservative: 19%

Very conservative: 17%

Don’t know/Refused: 5%

So, taking into consideration the large margin of error, the small sample size, and the oversampling of Republican voters, this poll doesn’t really tell us much at all about the electorate in those states.
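The 75% figure quoted from the crosstabs is simple addition, which a few lines of Python can confirm. The grouping into `liberal`, `conservative`, and `moderate_or_conservative` is my own labeling for illustration, not the pollster’s.

```python
# Ideology shares from the crosstabs quoted above (percent of all respondents).
ideology = {
    "Very liberal": 10,
    "Somewhat liberal": 10,
    "Moderate": 39,
    "Somewhat conservative": 19,
    "Very conservative": 17,
    "Don't know/Refused": 5,
}

liberal = ideology["Very liberal"] + ideology["Somewhat liberal"]
conservative = ideology["Somewhat conservative"] + ideology["Very conservative"]
moderate_or_conservative = conservative + ideology["Moderate"]

print(f"Total: {sum(ideology.values())}%")
print(f"Liberal: {liberal}%  Conservative: {conservative}%")
print(f"Moderate or conservative: {moderate_or_conservative}%")
```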

Let’s pause for a moment and evaluate what we’ve learned so far with regard to our categories of political polling misuse:

✅ Sensationalism? Check. The Times’ headline blares “Trump Leads in 5 Critical States as Voters Blast Biden” yet the poll’s own data hardly supports that premise.

✅ Bias? Check. See above.

✅ Overemphasis on Horse Race Journalism? Check. This poll is all about the horse race.

✅ Sampling Issues? Check. Small sample size. Large margin of error. Oversampling of Republicans.

✅ Lack of Transparency? Check. Burying basic methodological information at the bottom of the article ensures most people will not see it, because people rarely read an entire article. But all media outlets do that, not just the Times. What is inexcusable is failing to divulge the oversampling of Republicans within the article. But then that would dilute the claims in the headline, wouldn’t it?

✅ Interpretation & Presentation? Check. See Lack of Transparency regarding the lack of discussion about oversampling Republicans.

The Times As A Viral Engine

It is particularly troubling when the New York Times releases polling results that can reasonably be interpreted as suspect, especially when those results are promoted with a sensationalist headline that doesn’t accurately reflect the actual data.

Sensationalist headlines are, of course, clickbait journalism and the New York Times is not alone in using such tactics to drive website traffic.

But, as “the paper of record,” the New York Times ostensibly holds itself to a higher standard. And because of this perceived esteem, when the Times leads, other media outlets invariably follow, from broadcast to print to digital.

And that dynamic creates a viral effect. This screenshot of Google Search results about the poll illustrates how everyone follows the Times’ lead:

A lot of people will only have read the headline of the poll as they scroll through their feed or see the notification on their phone, so that is the only understanding of the poll they will ever get.

Additionally, broadcast media coverage of the results was hyperbolic and lacked any real examination of the actual data, taking the headlines as fact.

For low-information Democrats, such headlines are likely demoralizing. Demoralized voters who believe their vote won’t matter are less likely to turn out.

Which brings us to the next point about this poll: The timing of its release. The poll results were published on November 5, just two days before elections in Virginia, Kentucky and Ohio.

On November 7, the Governor of Kentucky was up for re-election, control of Virginia’s legislature was at stake, and Ohioans were to decide whether or not to enshrine abortion rights in that state’s constitution.

While the Times poll did not survey voters in any of these states, it is plausible that some voters in those states could have felt demoralized and not shown up to the polls as a result.

There is no way to measure whether that dynamic actually occurred and the Democratic victories in each of those states would suggest not. But more importantly, those victories are actual data that contradicts the Times’ suggestion of Republican strength in battleground states.

✅ Timing? Check. Releasing political polling results promoted by sensational and misleading headlines just days before important elections in swing states was irresponsible.

✅ Effect on Public Opinion? If you had done any social listening on November 5, you could not have avoided seeing the collective freakout about Trump’s alleged lead over Biden.

Political Polling’s Dodgy History

Look, this is one poll but it is emblematic of many of the problems with political polling. There is a long and sorry history of political polling getting it disastrously wrong. The Conversation gives us a brief history:

In 1936, the Literary Digest poll predicted Republican Alf Landon would easily defeat President Roosevelt.

In 1948, pollster Elmo Roper stopped releasing survey results two months before the election, confident Dewey’s lead in the polls would last. That, of course, resulted in the iconic photo of President Truman holding a copy of the Chicago Daily Tribune featuring the headline “Dewey Defeats Truman.”

In 1960, the Roper poll predicted a two-point Nixon victory.

In 1980, polls predicted a close race between President Carter and Ronald Reagan. Reagan won by 10 percentage points.

In 2016, polls predicted Hillary Clinton would win in the battleground states of Wisconsin, Pennsylvania, and Michigan.

In 2020, polls overstated support for President Biden by nearly 4 percentage points.

In 2022, Gallup confidently predicted a red wave. Democrats vastly overperformed expectations. The same Siena polling outfit we just dissected said independent women were swinging to the GOP in the wake of the Dobbs decision. We know how that turned out.

Polling Results Are Not Facts

And yet despite these consistent failures, people still treat polling results as fact.

Why?

Because the political commentariat treats them that way.

When discussing polls themselves, reporters and pundits alike treat the results as facts without addressing the nuances or limitations of the poll.

Reporters also use poll results as data points to support the premise of other news coverage, as this segment did on Sunday in covering President Biden’s visit to South Carolina.

Here’s the transcript:

As the Biden campaign co-chair, Clyburn’s also here combating a polling reality. The most recent NBC News survey, taken in November, showing 61% of Black voters would choose Biden over a Republican. But in 2020, our exit polls show Biden won 87% of the Black vote.

Let’s start with the phrase “polling reality,” which frames the numbers about to be cited as an indisputable fact when, as we have just seen, that is a dubious proposition at best.

Another red flag: “most recent NBC News survey, taken in November.” So that’s—let me count—one, two, about three months ago.

It is highly questionable that the 61% figure remains the same today. Could be higher. Could be lower. As you’ve no doubt heard many times before, polls are a snapshot in time.

Lastly, NBC News is comparing apples to oranges. Survey results are different from exit polls. While both methodologies depend upon self-reporting, exit polls are far more likely to be accurate because they capture actual voters reporting an action they’ve just taken, whereas surveys are speculations on the future by potential voters.

A better approach would be to look at the actual vote totals by African Americans in recent elections in, say, Kentucky, Virginia, and Ohio and compare the turnout to the most recent past elections in those states to see if the percentage of the Black vote has grown or shrunk in those states.

True, this is not a direct comparison of South Carolina voters, but it would be more likely to indicate a trend among Black voters than what that NBC segment attempted to show.

Media outlets and the pundits who fill broadcast segments have a vested interest in polling as a way to create “news,” generate interest, and drive ratings. The inclusion of poll results as a data point in support of reporting without the necessary qualifications is just laziness.

Misleading Political Polling Metrics

If you fully understand the methodology and have access to the wording of the questions, polls can be useful in interpreting public opinion.

They are most useful as a snapshot in time and as such, lose value over time.

Polls are useful over time when the same question is asked of respondents over and over again during the course of a year or years. These are known as tracking polls and are useful in understanding how sentiment can change over time.

But even tracking polls are fraught with challenges.

Right Track/Wrong Track

The right track/wrong track question is a staple of tracking polls but the question—and therefore the answers—are subject to interpretation.

The answer I give as a polling respondent depends entirely on how I interpret the question.

For example, I may think that the course President Biden has set the country on is the right course and that we are making progress but I may also believe that the country is on the wrong track because of the rise of a fascist movement in the country.

So, though I think the country is on the right track in terms of policy and the administration’s accomplishments, I may answer that the country is on the wrong track because I am alarmed at the rise of fascism.

If a lot of people feel as I do but answer with a wrong track response, it is likely that journalists will interpret those numbers as an indictment of Biden’s performance, when nothing could be further from the truth.

Approval Rating

Another misleading aspect of tracking polls is the approval rating question.

The same basic dynamic applies here. I may approve of all of the policies the Biden administration has put in place and appreciate Biden’s accomplishments but be frustrated that he’s not doing more on an issue that is important to me and therefore express disapproval to the pollster.

That is in no way an indication that I don’t plan on voting for him. But that is how approval ratings are interpreted.

Both of these metrics—right track/wrong track and approval rating—can influence public sentiment. If I see big wrong track numbers and low approval ratings for Biden, I might feel I’m in the minority, be demoralized and therefore less motivated to vote.

Ultimately, the only polling that matters is the ballots that are cast in elections.

So take polls with the grains of salt they deserve, investigate the details of how they were conducted, and use whatever insights make sense, along with other, better data like actual election results, to help you best interpret the political landscape.

To that end, here’s a breakdown to help you understand the elements that go into opinion polling. (The following section was written with the assistance of AI but edited and vetted by me.)

The Anatomy & Art of Polling

The Foundation: Sampling

Constructing a sample is akin to painting a miniature portrait of society. The 2016 U.S. Presidential election, for instance, highlighted the pitfalls of underrepresenting rural voters. This underscores the importance of a sample that mirrors the population's diversity and complexity.

Sample Size

Sample size is crucial in public opinion polling for several reasons:

Accuracy

A larger sample size generally leads to more accurate results. It reduces the margin of error, meaning the poll's results are likely to be closer to the true opinion of the entire population.

Representation

A sufficiently large sample is more likely to include diverse segments of the population, reflecting various demographics, political beliefs, and other characteristics. If the sample isn't truly representative, it can lead to biased results, for instance by over-representing a certain demographic group. Populations evolve, so keeping the sampling frame updated is a challenge. People might choose not to participate, creating another challenge that could lead to nonresponse bias.

Confidence Level

The confidence level is the degree of certainty, conventionally 95%, that the true opinion of the entire population falls within the poll's margin of error. The pollster chooses the confidence level; it is not a product of the sample size. What a larger sample buys is a narrower margin of error at that same level of confidence.

Statistical Significance

A larger sample size increases the likelihood that the poll's findings are statistically significant, meaning the results aren't due to random chance. This is especially important when trying to detect small differences in opinions or when dealing with a population that has diverse views.

Subgroup Analysis

A sufficiently large sample allows for reliable analysis of subgroups within the population. For instance, understanding the opinions of specific age groups, geographic regions, or socio-economic classes requires enough respondents from each subgroup to draw meaningful conclusions.

Reducing the Margin of Error

The margin of error in a poll decreases as the sample size increases. For example, at the usual 95% confidence level, a poll with 1,000 respondents has a margin of error of around three percentage points, whereas a poll with 500 respondents has a margin of error of around four and a half. A smaller margin of error means the poll results are likely to be a closer approximation of the true opinion of the entire population.

Cost & Practicality Considerations

While larger samples provide more accuracy, there's also a practical limit based on cost and logistics. Larger samples mean more interviews, more data processing, and ultimately more time and money. Pollsters often have to balance the need for a large sample with the resources available. Sampling a smaller, manageable group is more economical and faster than surveying an entire population.

Law of Diminishing Returns

There's a point where increasing the sample size further provides little to no improvement in accuracy. For example, increasing a sample size from 1,000 to 2,000 reduces the margin of error, but not as dramatically as increasing from 100 to 1,000. Understanding this helps in allocating resources efficiently.
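The diminishing returns are easy to see by tabulating the margin of error at a few sample sizes. This is a minimal sketch that assumes a simple random sample, a 95% confidence level, and the worst-case 50/50 split; the function name `margin_of_error` is my own.

```python
import math


def margin_of_error(n, z=1.96, p=0.5):
    """Worst-case margin of error (percentage points) for a simple random sample."""
    return 100 * z * math.sqrt(p * (1 - p) / n)


previous = None
for n in (100, 500, 1000, 2000, 4000):
    moe = margin_of_error(n)
    gain = "" if previous is None else f" (improves by {previous - moe:.1f})"
    print(f"n = {n:>5}: +/-{moe:.1f} points{gain}")
    previous = moe
```

Going from 100 to 1,000 respondents cuts the margin by more than six points; going from 1,000 to 2,000 cuts it by less than one.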

Weighting in Public Opinion Polling

Weighting is a statistical adjustment made to survey data to ensure that the sample accurately represents the population, especially when certain groups are over- or under-represented in the sample.

Purpose

Correcting Imbalances

If a sample has too many or too few respondents from a particular demographic group relative to the population, weighting adjusts for this.

Enhancing Accuracy

By accounting for demographic discrepancies, weighting helps to produce more accurate results that reflect the true opinions of the overall population.

How It Works

Identifying Key Demographics

Pollsters identify important characteristics like age, gender, race, education, and geographic location.

Comparison to Population Benchmarks

These sample demographics are compared to known benchmarks of the population, usually from reliable sources like census data.

Assigning Weights

Each response is given a weight based on how representative it is of the population. For example, if young people are underrepresented in the sample, their responses might be given more weight.
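The three steps above can be sketched in a few lines. This is a simplified illustration of weighting on a single variable, with made-up numbers; real pollsters typically weight on several variables at once, and every name and figure below is hypothetical.

```python
# Hypothetical sample: respondents per age group and the share of each group
# that supports some candidate. None of these numbers come from a real poll.
sample_counts = {"18-34": 100, "35-64": 500, "65+": 400}
support_rate = {"18-34": 0.60, "35-64": 0.50, "65+": 0.40}

# Hypothetical population benchmarks (e.g. from census data); must sum to 1.
population_share = {"18-34": 0.30, "35-64": 0.50, "65+": 0.20}

total = sum(sample_counts.values())

# Weight = population share / sample share. Under-represented groups get > 1.
weights = {
    group: population_share[group] / (sample_counts[group] / total)
    for group in sample_counts
}

unweighted = sum(sample_counts[g] * support_rate[g] for g in sample_counts) / total
weighted = sum(
    sample_counts[g] * weights[g] * support_rate[g] for g in sample_counts
) / sum(sample_counts[g] * weights[g] for g in sample_counts)

print({g: round(w, 2) for g, w in weights.items()})
print(f"Unweighted support: {unweighted:.1%}")
print(f"Weighted support:   {weighted:.1%}")
```

In this made-up sample the young respondents are under-represented, so each one counts three times, and the headline number moves from 47% to 51%. That is how much a weighting decision can matter.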

Challenges

Selecting Weighting Variables

Choosing which variables to weight by is crucial and can significantly impact results.

Over-Weighting

If a sample is very skewed, some responses might be overly weighted, which can introduce its own biases.

Assumptions About Non-Respondents

Weighting often involves assumptions about the opinions of non-respondents, which can be risky.
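One way to see the cost of heavy weighting is the "effective sample size," a standard approximation usually credited to the statistician Leslie Kish. I am bringing it in as an illustration; it is not something the polls discussed here report, and the weights below are hypothetical.

```python
def effective_sample_size(weights):
    """Kish's approximation: (sum of weights)^2 / (sum of squared weights)."""
    return sum(weights) ** 2 / sum(w * w for w in weights)


# Hypothetical 1,000-person samples, one weight per respondent.
even = [1.0] * 1000
skewed = [3.0] * 100 + [1.0] * 500 + [0.5] * 400

print(f"Equal weights:  n_eff = {effective_sample_size(even):.0f}")
print(f"Skewed weights: n_eff = {effective_sample_size(skewed):.0f}")
```

With the skewed weights, 1,000 interviews carry roughly the statistical punch of 667, which means a wider margin of error than the raw sample size suggests.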

Pros & Cons

Pros

Makes the best use of available data; can significantly improve the representativeness of the results.

Cons

Adds complexity; if done incorrectly, it can introduce new biases.

Evolving Techniques

With changing survey modes and declining response rates, the methods and approaches to weighting are continually evolving. Pollsters and statisticians constantly refine weighting techniques to deal with new challenges in data collection.

Question Design: Crafting with Precision

The subtleties in how questions are phrased can significantly guide responses. Slight differences in wording can elicit varied opinions.

Importance of Word Choice

The wording of questions in a poll is critical because it can significantly influence how respondents understand and answer the questions. The goal is to craft questions that are clear, unbiased, and reflective of the respondent's true opinions.

Challenges

Avoiding Leading Questions

Questions must be neutral and not lead respondents to a particular answer.

Clarity & Simplicity

Questions should be easy to understand, avoiding complex language or jargon that might confuse respondents.

Avoiding Double-Barreled Questions

Questions should only ask about one thing at a time, not multiple issues in one question.

Cultural Sensitivity

The wording needs to be appropriate and sensitive to different cultural contexts.

Impact

Response Bias

Poorly worded questions can lead to response bias, where the wording influences the respondent's answer.

Misinterpretation

Ambiguous or confusing questions can result in respondents interpreting the question in different ways, leading to unreliable data.

Best Practices

Pre-Testing

Conducting preliminary tests of the survey on a small scale to identify potential issues in question wording.

Review & Revision

Having experts or peers review the questions to ensure clarity and neutrality.

Consistency

Using similar wording in questions when tracking opinions over time to ensure comparability.

Examples

Bad Wording

"Do you agree with the unnecessary and harmful restrictions on gun ownership?"

Improved Wording

"Do you support or oppose restrictions on gun ownership?"

Mode Of Survey

The mode of survey refers to the method used to conduct the poll. Common modes include telephone interviews, online surveys, face-to-face interviews, and mail surveys.

Different Modes

Telephone Interviews

These can be landline or mobile phone surveys. They were once the gold standard but have faced challenges like declining response rates and the shift from landlines to mobile phones.

Online Surveys

Increasingly popular due to their cost-effectiveness and speed. They rely on internet access, which can skew towards certain demographic groups.

Face-to-Face Interviews

These can provide more in-depth data and higher response rates but are more time-consuming and costly.

Mail Surveys

Less common now, but useful in certain contexts, like reaching populations without reliable internet or phone access.

Pros & Cons

Telephone Interviews

Pros: Can reach a wide demographic, including older populations.

Cons: Lower response rates; mobile phone prevalence makes it harder to create a representative sample.

Online Surveys

Pros: Quick and cost-effective; allows for innovative question formats.

Cons: Internet access bias; may exclude less tech-savvy populations.

Face-to-Face Interviews

Pros: Deeper engagement; can clarify misunderstandings.

Cons: Expensive and time-consuming; interviewer bias can be a factor.

Mail Surveys

Pros: Accessible to those without internet or phone; perceived as less intrusive.

Cons: Slow response time; lower overall response rates.

Challenges

Representativeness

Each mode has biases in who is likely to respond, affecting how representative the sample is of the overall population.

Technological Changes

Rapid tech changes affect how people communicate, influencing the effectiveness of different survey modes.

Response Rates

All modes face challenges with declining response rates, affecting the quality of data collected.

Adapting to Change

Pollsters often combine different modes to balance out the biases and limitations of each. For instance, they may mix online and telephone methods to get a broader reach.

Response Rate

The response rate is the percentage of people contacted who actually complete the survey. It's a crucial metric for assessing the quality of a poll.

Importance

Indicator of Quality

A higher response rate typically indicates a more reliable and accurate poll, as it suggests a better representation of the target population.

Bias Reduction

Higher response rates reduce the risk of nonresponse bias, where the opinions of non-respondents might differ significantly from those who participated.

Challenges

Declining Rates

Response rates have been declining globally, partly due to survey fatigue and concerns about privacy.

Reaching Diverse Groups

Certain demographics are harder to reach, potentially leading to under-representation of these groups in the survey results.

Strategies to Improve Response Rates

Multiple Contact Methods

Using various methods (phone, email, mail) to reach potential respondents.

Incentives

Offering small incentives can encourage participation.

Follow-ups

Sending reminders or follow-up requests to non-respondents.

Simplifying Surveys

Making surveys shorter and easier to complete can improve response rates.

Measuring Response Rate

Calculation

It's calculated by dividing the number of completed surveys by the total number of contacts attempted.

Reporting

Transparently reporting response rates is essential for assessing the poll's credibility.
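As a minimal sketch of the calculation described above, using the simple definition given here (completed surveys divided by contacts attempted). The function name and the fieldwork numbers are hypothetical.

```python
def response_rate(completed, contacts_attempted):
    """Completed surveys divided by contacts attempted, as a fraction."""
    if contacts_attempted <= 0:
        raise ValueError("contacts_attempted must be positive")
    return completed / contacts_attempted


# Hypothetical fieldwork numbers, for illustration only.
rate = response_rate(completed=610, contacts_attempted=40_000)
print(f"Response rate: {rate:.1%}")
```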

Impact On Polling Results

Low Response Rates

Can lead to biased results if non-respondents differ significantly from respondents.

Overestimation of Precision

Low response rates can give a false sense of precision if the sample isn't representative of the broader population.

Adjustments For Low Response Rates

Weighting

Pollsters often use statistical techniques to adjust results to compensate for under-represented groups.

Benchmarking

Comparing results to known benchmarks or other reliable data sources to assess accuracy.

Analysis & Interpretation in Polling

Analysis and interpretation involve examining the collected survey data to draw meaningful conclusions and insights. This stage turns raw data into understandable information about public opinion.

Key Aspects

Data Cleaning

Initially, the data is cleaned to remove any errors or inconsistencies, like incomplete responses or outliers.

Statistical Analysis

Pollsters use various statistical methods to analyze the data, such as calculating averages, percentages, and cross-tabulations to explore relationships between variables.

Understanding Margin of Error

Recognizing the inherent uncertainty in any poll. The margin of error indicates the range within which the true value for the whole population is likely to fall.

Trend Analysis

Comparing current results with previous polls to identify trends or shifts in public opinion.

Subgroup Analysis

Looking at how different demographic groups (age, race, gender, etc.) responded, which can reveal more nuanced insights.

Challenges

Avoiding Misinterpretation

Data can sometimes be interpreted in ways that are misleading or ignore the complexity of the underlying opinions.

Contextualizing Results

Understanding the broader social, political, or economic context is crucial for accurate interpretation.

Dealing with Ambiguity

Some survey responses might be ambiguous or open to multiple interpretations.

Importance of Transparency

Transparent reporting of methodology, data analysis, and interpretation is essential for credibility. This includes disclosing the margin of error, sample size, and how the sample was obtained.

Bias & Objectivity

Analysts must be aware of their own biases and strive for objectivity. The phrasing of conclusions and the presentation of data should be as neutral and unbiased as possible.

Communicating Results

Effectively communicating the findings to the public, stakeholders, or clients in an understandable and responsible manner is a key part of the process. This often involves simplifying complex statistical information without losing its accuracy.

Understanding The Margin Of Error

The margin of error in polling is a measure that represents the degree of uncertainty or potential error in the results of a survey. It's a crucial concept for understanding how closely the poll's findings might match the true feelings or behaviors of the entire population. Here's how it works:

Interpretation

Range of Results

If a poll shows that 50% of respondents favor a candidate with a margin of error of ±3%, it means the actual proportion in the entire population could be as low as 47% or as high as 53%.

Not a Measure of Accuracy

The margin of error does not directly measure the accuracy of the poll's results. It only gives an estimate of the possible range of error in the sample.
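Here is a small sketch of that interpretation, along with a rough rule of thumb for deciding whether a lead is "within the margin of error." The overlap check is my own simplification, not a formal statistical test, and both function names are hypothetical.

```python
def plausible_range(estimate, margin):
    """Return the (low, high) range implied by an estimate and its margin of error."""
    return (estimate - margin, estimate + margin)


def ranges_overlap(a, b, margin):
    """True when two candidates' plausible ranges overlap.

    This is only a rough rule of thumb for "too close to call", not a formal test.
    """
    low_a, high_a = plausible_range(a, margin)
    low_b, high_b = plausible_range(b, margin)
    return low_a <= high_b and low_b <= high_a


print(plausible_range(50, 3))     # the 50% +/- 3 example from the text
print(ranges_overlap(48, 44, 3))  # hypothetical 4-point lead, +/-3 margin
print(ranges_overlap(55, 40, 3))  # hypothetical 15-point lead, +/-3 margin
```

A four-point lead with a three-point margin leaves the two ranges overlapping, so the honest headline is "too close to call." A fifteen-point lead does not have that problem.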

Factors Affecting Margin of Error

Sample Size

Larger samples reduce the margin of error, but the relationship is not linear. Doubling the sample size doesn't halve the margin of error.

Population Variability

If the population has a lot of variability, the margin of error may be larger.

Survey Methodology

Different sampling methods can affect the margin of error, such as stratified sampling or cluster sampling.
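The non-linear relationship follows from the standard textbook formula for a simple random sample. I am assuming a 95% confidence level (z = 1.96), an observed proportion p, and n respondents; polls that use weighting or cluster designs will differ from this idealized case.

```latex
\[
\text{MoE} = z \sqrt{\frac{p(1-p)}{n}}
\qquad\Longrightarrow\qquad
\frac{\text{MoE}(2n)}{\text{MoE}(n)} = \frac{1}{\sqrt{2}} \approx 0.71,
\qquad
\frac{\text{MoE}(4n)}{\text{MoE}(n)} = \frac{1}{2}.
\]
```

So doubling the sample trims the margin of error by only about 29 percent; halving it takes four times as many respondents. The p(1 - p) term is the population variability, and it is largest when opinion is split 50/50.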

Limitations

Applies to Random Sampling

The margin of error concept assumes a probability sampling method. It may not apply to non-random samples.

Doesn’t Account for All Errors

It doesn't cover errors like biased question wording, survey mode effects, or non-response bias.

Understanding Crosstabs

Crosstabs are tables that show the relationship between two or more survey questions or variables. They display how different responses to one question vary in relation to responses to another question.

Usage in Polling

Identifying Patterns and Relationships

Crosstabs help in discovering patterns or relationships between different variables. For example, how opinions on a policy issue vary by age group, gender, or education level.

Segmenting Data

They allow pollsters to break down overall survey results into segments based on demographics or other characteristics. This segmentation helps in understanding the specific opinions of different groups within the population.

Hypothesis Testing

Crosstabs are used to test hypotheses about relationships between variables. For instance, testing if there’s a significant difference in political preferences between different income groups.

Insight Into Voter Behavior

In political polling, crosstabs are crucial for understanding voter behavior, such as which candidate is favored by different demographic groups.

Tailoring Communication

Results from crosstabs can guide campaigns, organizations, or businesses in tailoring their communication or strategies to specific demographic or interest groups.
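The hypothesis-testing use mentioned above can be sketched with a chi-square test, one common way to check whether a pattern in a crosstab is more than chance. This is an illustration only: the counts are invented, the test assumes unweighted counts from a random sample, and 5.991 is the standard 5% cutoff for a table with two degrees of freedom.

```python
# Hypothetical crosstab: income group (rows) by preferred candidate (columns)
observed = {
    "Lower income":  {"Candidate A": 120, "Candidate B": 80},
    "Middle income": {"Candidate A": 100, "Candidate B": 100},
    "Higher income": {"Candidate A": 80,  "Candidate B": 120},
}
columns = ["Candidate A", "Candidate B"]

row_totals = {row: sum(cells.values()) for row, cells in observed.items()}
col_totals = {col: sum(observed[row][col] for row in observed) for col in columns}
grand_total = sum(row_totals.values())

# Compare each cell with the count we would expect if income and
# candidate preference were unrelated
chi_square = 0.0
for row in observed:
    for col in columns:
        expected = row_totals[row] * col_totals[col] / grand_total
        chi_square += (observed[row][col] - expected) ** 2 / expected

degrees_of_freedom = (len(observed) - 1) * (len(columns) - 1)
CRITICAL_VALUE_DF2 = 5.991  # 5% significance cutoff for 2 degrees of freedom
significant = chi_square > CRITICAL_VALUE_DF2

print(f"chi-square = {chi_square:.1f} with {degrees_of_freedom} degrees of freedom")
print("Significant difference" if significant else "Could be chance")
```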

Creating Crosstabs

Selecting Variables

The first step is to choose the variables to cross-tabulate. These could be any two or more questions from the survey.

Building the Table

The responses to these variables are then laid out in a table format, showing the intersection of responses.
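The two steps above can be sketched in a few lines. The responses here are hypothetical, and a real poll would have hundreds of rows and would normally apply weights, but the mechanics are the same: pick two variables, count each intersection, then convert the counts to percentages within each group.

```python
from collections import Counter

# Hypothetical responses: (age group, answer to a policy question)
responses = [
    ("18-34", "Support"), ("18-34", "Support"), ("18-34", "Oppose"),
    ("35-64", "Support"), ("35-64", "Oppose"), ("35-64", "Oppose"),
    ("65+", "Oppose"), ("65+", "Oppose"), ("65+", "Support"),
]

# Count how many respondents fall into each intersection
counts = Counter(responses)
rows = sorted({age for age, _ in responses})
cols = sorted({answer for _, answer in responses})

def row_percentages(age):
    """Share of each answer within one age group."""
    total = sum(counts[(age, answer)] for answer in cols)
    return {answer: counts[(age, answer)] / total for answer in cols}

# Print the table with one row per age group
print(f"{'Age':<8}" + "".join(f"{answer:>10}" for answer in cols))
for age in rows:
    shares = row_percentages(age)
    print(f"{age:<8}" + "".join(f"{shares[answer]:>10.0%}" for answer in cols))
```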

Interpreting Crosstabs

Analyzing Proportions

Pollsters look at the proportion of respondents in each category or intersection of categories.

Identifying Trends

Key trends or deviations from expected patterns are identified and analyzed.

Challenges

Over-Interpretation

There's a risk of over-interpreting small differences or patterns that might not be statistically significant.

Sample Size Issues

For some subgroups, the sample size might be too small to draw reliable conclusions.
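To see why small subgroups are a problem, consider how the margin of error grows as a crosstab slices the sample thinner. This sketch assumes the simple-random-sample formula at 95% confidence, and the subgroup sizes are hypothetical.

```python
import math

def margin_of_error(sample_size, proportion=0.5, z=1.96):
    # Textbook formula for a simple random sample (an assumption of this sketch)
    return z * math.sqrt(proportion * (1 - proportion) / sample_size)

# Hypothetical subgroup sizes within a 1,000-person poll
subgroups = {
    "All respondents": 1000,
    "Women": 520,
    "Ages 18-34": 210,
    "Rural, ages 18-34": 45,
}

subgroup_moe = {name: margin_of_error(n) for name, n in subgroups.items()}
for name, moe in subgroup_moe.items():
    print(f"{name:<20} n = {subgroups[name]:>4}   +/-{moe:.1%}")
```

A poll that is good to about three points overall can be off by fourteen or more for its smallest subgroup, so a headline built on that subgroup deserves extra skepticism.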

Importance In Public Opinion Research

Crosstabs are essential in public opinion polling as they provide a deeper understanding of how different groups within the population think and feel about various issues. They add nuance to the interpretation of survey data, allowing for a more detailed and segmented analysis of public opinion.

Trend Analysis In Polling

Trend analysis in polling refers to the process of comparing survey results over time to identify patterns, shifts, or changes in public opinion.

The true strength of polls lies in tracking trends over time. The gradual shift in public opinion on same-sex marriage in the U.S., as tracked by Pew Research, exemplifies the insights gained from observing long-term trends.

Purpose

Tracking Changes

It helps in understanding how public opinion on certain issues evolves over time.

Identifying Patterns

Trends can reveal recurring patterns, such as seasonal fluctuations in opinions or attitudes.

How It's Conducted

Consistent Measurement

To accurately track trends, the same questions need to be asked in the same way over different time periods.

Regular Intervals

Polls are conducted at regular intervals (monthly, annually, etc.) to maintain a consistent timeline for comparison.

Graphical Representation

Often, results are plotted on graphs or charts to visually depict changes over time.

Importance

Contextual Understanding

It provides context for current opinions by showing how they've changed from the past.

Policy & Decision-Making

For policymakers and businesses, understanding trends is crucial for making informed decisions.

Challenges

Changes in Methodology

If the survey method changes (e.g., from phone to online), it can affect the comparability of results over time.

Interpreting Causality

Just because two trends occur simultaneously doesn't necessarily mean one caused the other.

Respondent Changes

The demographic composition of the population can change over time, affecting trend analysis.

Applications

Political Polling

Tracking public opinion on political figures, parties, or issues over an election cycle.

Market Research

Understanding changing consumer preferences or market trends.

Considerations

Longitudinal vs. Cross-Sectional Data

Trend analysis usually relies on cross-sectional data (different respondents at each time point). Longitudinal data (same respondents over time) can provide deeper insights but is harder to obtain.

Statistical Significance

Ensuring that observed changes are statistically significant and not just due to random variation.
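One common way to check whether a shift between two polls is more than random variation is a two-proportion z-test. The sketch below assumes two independent simple random samples and a 95% confidence threshold; the function name and the poll numbers are hypothetical.

```python
import math

def change_is_significant(p1, n1, p2, n2, z_critical=1.96):
    """Two-proportion z-test for two independent polls.

    p1, p2 are the shares (0 to 1) in the earlier and later poll;
    n1, n2 are the sample sizes. Returns the z score and a verdict.
    """
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p2 - p1) / standard_error
    return z, abs(z) > z_critical

# A candidate moves from 46% to 48% between two 1,000-person polls
z_small, sig_small = change_is_significant(0.46, 1000, 0.48, 1000)
print(f"46% -> 48%: z = {z_small:.2f}, significant: {sig_small}")

# The same candidate moves from 46% to 52%
z_large, sig_large = change_is_significant(0.46, 1000, 0.52, 1000)
print(f"46% -> 52%: z = {z_large:.2f}, significant: {sig_large}")
```

The two-point "surge" in the first comparison is indistinguishable from noise, which is exactly the kind of change that tends to generate breathless headlines.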

Adapting To Polling Challenges

Pollsters modify their techniques and strategies in response to evolving societal, technological, and demographic trends that affect data collection and analysis in public opinion research.

Key Drivers Of Change

Technological Evolution

Adapting to new communication methods, like social media, mobile devices, and online platforms.

Incorporating digital data collection and analysis tools.

Changing Communication Habits

Addressing the decline in traditional phone response rates and the rise of internet-based communication.

Finding ways to reach populations that are less accessible through conventional methods.

Demographic Shifts

Keeping pace with changes in population dynamics, such as aging populations or increasing diversity.

Adjusting sampling methods to ensure representative coverage of all demographic segments.

Methods Of Adaptation

Innovative Sampling Techniques

Developing and testing new sampling methods to improve representativeness and response rates.

Exploring targeted sampling and stratified approaches to better capture diverse opinions.

Advanced Data Analysis

Utilizing big data analytics and machine learning algorithms for deeper insights and predictive modeling.

Adapting statistical techniques to handle the complexities of modern datasets.

Enhanced Engagement Strategies

Employing interactive and engaging survey designs to increase participation.

Customizing outreach and communication to resonate with different demographic groups.

Challenges

Rapid Pace of Change

Keeping up with fast-evolving technological and societal trends can be challenging.

Maintaining Accuracy & Consistency

Ensuring that new methods preserve the accuracy and reliability of data over time.

Resource Allocation

Innovating within the constraints of available resources and budgets.

Importance

Ensuring Relevance

Continuously updating methodologies is crucial for maintaining the relevance and effectiveness of polling in a changing world.

Accurate Representation

Adapting methods to accurately reflect the views of an evolving population.

Push Polling

Push polling is a controversial and often criticized practice in the field of opinion polling and political campaigning. It is not a genuine polling technique but rather a political campaign tactic. It's disguised as a regular opinion poll but is actually intended to influence voters under the guise of conducting a survey. Here's a breakdown of what it involves:

Characteristics

Leading Questions

The questions are designed to "push" respondents towards a specific viewpoint or opinion, often by providing negative or misleading information about a candidate or issue.

Manipulative

The primary aim is to shape opinions rather than measure them. The questions might include false or exaggerated claims to sway respondents' views.

Lack Of Demographic Data Collection

Unlike legitimate polls, push polls often don't collect detailed demographic data from respondents.

Example

A push poll might ask a question like, "Would you be more or less likely to vote for Candidate X if you knew they were being investigated for financial fraud?" regardless of whether the allegation is true.

Criticisms

Misleading and Unethical

Push polls are widely criticized for being deceptive and unethical, as they spread potentially false information under the pretense of legitimate polling.

Damaging To Genuine Polling

They can erode public trust in legitimate opinion polling and political processes.

Lack Of Transparency

Push polls often do not disclose their sponsor or intention, misleading respondents about the nature of the survey.

Legal & Ethical Considerations

In some jurisdictions, there are legal restrictions or guidelines against push polling. Ethically, it's considered malpractice in both political campaigning and opinion research.

Sources & Resources

How to Lie With Statistics by Darrell Huff - A classic. I was assigned this book my first year in college and I’ve referred back to it ever since.