LinkedIn's New AI Article Collaborations

What reputational factors are at work in LinkedIn's AI collaborations?

The speed with which generative AI is being adopted has been pretty breathtaking.

Just this week, I got an offer from Google Ads to automatically generate headlines and ad copy for me from an initial text input. And if you hadn’t noticed, I’ve become obsessed with Midjourney (I’ll get into that in a separate post). But I was also invited by LinkedIn to collaborate on an AI-generated article.

And it is that last item we’ll discuss today.

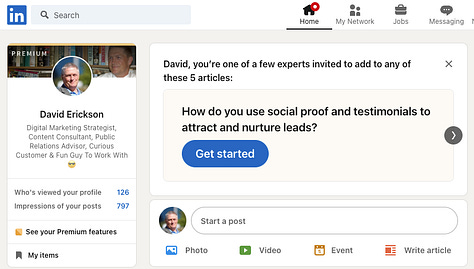

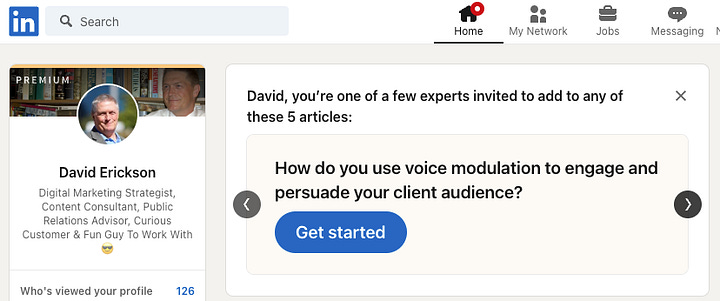

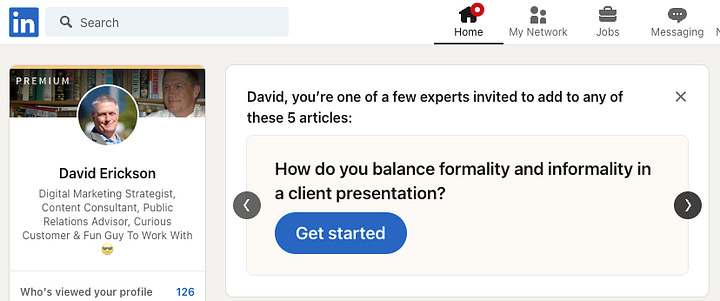

Upon visiting the LinkedIn home page, I was presented with an invitation to contribute to five AI-generated articles:

Alright, I’m game. I’m curious about how this will work. I chose the article asking “How do you balance formality and informality in a client presentation?”

I clicked the Get Started button and was taken to the article:

LinkedIn explains that the article is AI-generated and that it is inviting LinkedIn members, based on what it knows about their experience and expertise, to contribute to it.

After each paragraph, you have the option to “Add your perspective”:

Click on the plus icon, and you can enter your perspective:

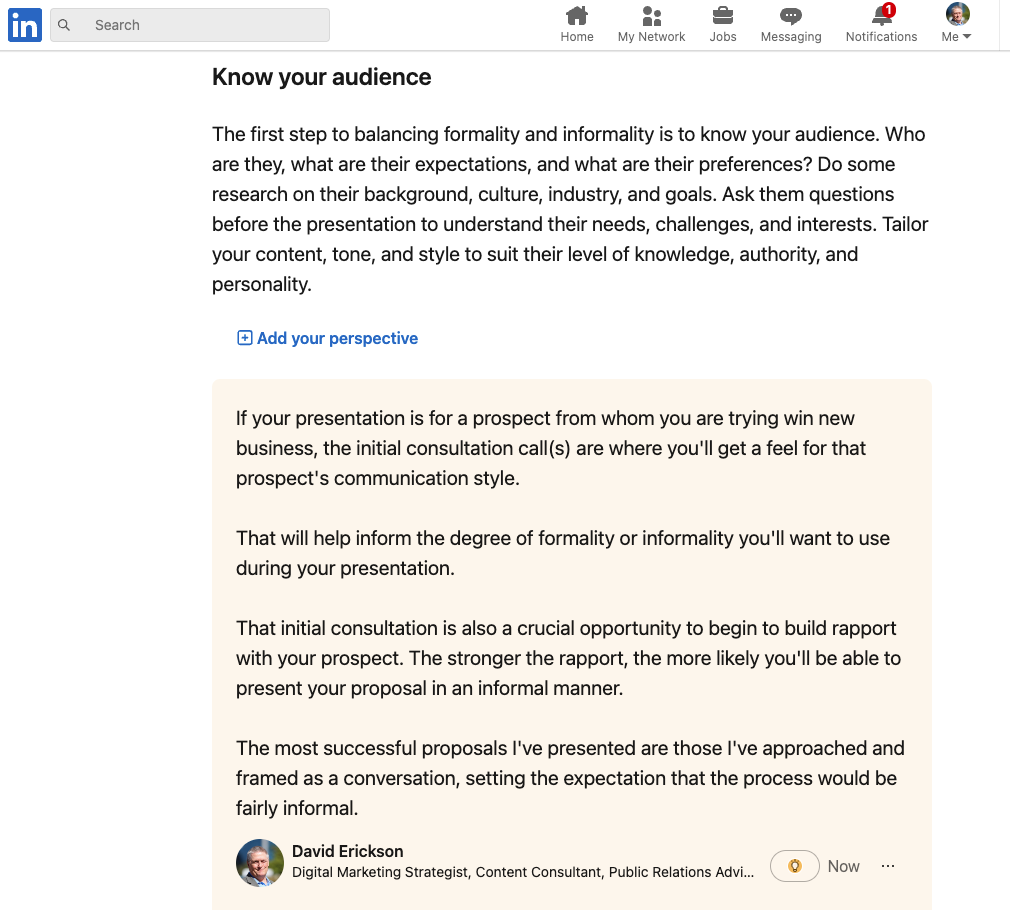

Click add, and your comments are added to the article:

As you can see, my LinkedIn profile is attached to the comment. And as the author of the comment, I can edit it, delete it, or copy a link to my contribution:

As you can also see, readers have the ability to Upvote a comment as insightful. They can also Report the comment and copy a link to it:

Paid Subscribers: For the next segment of Chapter 1, we’ll examine the reputational aspects of the common URL.

The AI Collaborative Experience

Before we get to the reputational aspects of this new LinkedIn experiment, a word about the experience of collaborating with AI using this format.

First, I was highly motivated to try this out simply out of professional curiosity. Absent that curiosity, I’m not sure I would’ve completed the process of collaborating on an AI-generated article.

It took me some time—much longer than it typically would—to think of what to say in response to one of the paragraphs in these articles. And it wasn’t because I had no thoughts at the ready on these topics.

I just wasn’t inspired to respond as I usually am on topics about which I’ve accumulated a fairly substantial body of knowledge. I think it’s because of the nature of the language that generative AI tools like ChatGPT create.

More often than not, the style of AI-generated text responses is the equivalent of monotone copy: accurate and explanatory, but hardly entertaining or compelling.

That’s how I felt reading these AI-generated LinkedIn articles. I had a similar experience when I used ChatGPT to generate some technical explanations for the last segment of Chapter 1. It took me a while to adapt the language to my voice because the base text wasn’t inspiring; it wasn’t motivating.

I’ve noticed this monotone quality with other generative AI tools I’ve used. (I plan to expand on that topic in a subsequent post.)

The Reputational Factors of LinkedIn’s AI Article Collaborations

My first question is, why did LinkedIn invite me to collaborate on these articles? Presumably, LinkedIn’s algorithm is serving up these invitations to people who have demonstrated a reputation for expertise on these topics through their profile contents or through their activity within LinkedIn.

These were the five AI-generated article choices LinkedIn gave me:

How do you use social proof and testimonials to attract and nurture leads?

How do you balance formality and informality in a client presentation?

How do you use voice modulation to engage and persuade your client audience?

How do you deal with voice fatigue or strain after multiple client presentations?

What are the common pitfalls and mistakes to avoid when crafting a product narrative?

I can understand why LinkedIn would conclude that I have the experience and expertise to answer the first question, about social proof. Much of my LinkedIn profile has to do with social media, and my current title at Tunheim is Director of Integrated Social and Digital Media.

The three client presentation questions are a bit of a stretch. I don’t specifically discuss that topic on my profile, but I do include links to earned media in which I discuss public speaking:

Top 25 Public Speaking Tips the Pros Use to Captivate Audiences

David Erickson Interview: Digital Marketing Meets Public Speaking

I can’t find a plausible explanation for the last one, about product narratives.

There is some evidence that my affinity for the topics LinkedIn is asking me to address is the basis for its collaboration recommendations. But it might also simply be that I share a lot of articles about AI and, as a result, might be more likely than the average LinkedIn user to collaborate on an AI-generated article.

We can see, then, that I’m likely generating some signals indicating that my reputation on LinkedIn includes certain areas of domain expertise.

But what about the reputational signals my participation in this collaboration generates? Here are some potential signals:

Curiosity in AI

Authority on the subject for which I added article content

Veracity of that content, pointing to either subject-matter expertise or incompetence

Third-party validation of such content through the Upvoting feature

Third-party vetting of such content through the Reporting feature

Dwell time on my comment compared to the rest of the article

Clicks on my profile link attached to my comment

Inbound link creation through the Copy link feature

Association with other human collaborators, who may have the same topical expertise as I do

What I’m Reading

New York Times - Drug companies cannot sell people new medicines without first subjecting their products to rigorous safety checks. Biotech labs cannot release new viruses into the public sphere in order to impress shareholders with their wizardry. Likewise, A.I. systems with the power of GPT-4 and beyond should not be entangled with the lives of billions of people at a pace faster than cultures can safely absorb them. A race to dominate the market should not set the speed of deploying humanity’s most consequential technology. We should move at whatever speed enables us to get this right…What would it mean for humans to live in a world where a large percentage of stories, melodies, images, laws, policies and tools are shaped by nonhuman intelligence, which knows how to exploit with superhuman efficiency the weaknesses, biases and addictions of the human mind — while knowing how to form intimate relationships with human beings? In games like chess, no human can hope to beat a computer. What happens when the same thing occurs in art, politics or religion? Read the full article.

There is a lot of technopanic over the development of artificial intelligence, but if social media has taught us anything, it’s that there are also profound reasons for caution.

Gates Notes - In my lifetime, I’ve seen two demonstrations of technology that struck me as revolutionary. The first time was in 1980, when I was introduced to a graphical user interface—the forerunner of every modern operating system, including Windows…The second big surprise came just last year. I’d been meeting with the team from OpenAI since 2016 and was impressed by their steady progress…The development of AI is as fundamental as the creation of the microprocessor, the personal computer, the Internet, and the mobile phone. It will change the way people work, learn, travel, get health care, and communicate with each other. Entire industries will reorient around it. Businesses will distinguish themselves by how well they use it. Philanthropy is my full-time job these days, and I’ve been thinking a lot about how—in addition to helping people be more productive—AI can reduce some of the world’s worst inequities. Read the full article.

This is worth the full read, as Bill Gates reviews some of the opportunities and some of the challenges posed by AI. He argues that AI developers, government and philanthropy should be focused on ensuring the benefits of AI are shared by all. That assumes good will on the part of all parties concerned. What is not addressed, and what needs to be addressed, is how to deal with adversarial actors such as authoritarian states or non-state terrorists who seek to destabilize society and sow chaos that can then be exploited for ideological or financial gain.

MIT Technology Review - …while companies and executives see a clear chance to cash in, the likely impact of the technology on workers and the economy on the whole is far less obvious. Despite their limitations—chief among of them their propensity for making stuff up—ChatGPT and other recently released generative AI models hold the promise of automating all sorts of tasks that were previously thought to be solely in the realm of human creativity and reasoning, from writing to creating graphics to summarizing and analyzing data. That has left economists unsure how jobs and overall productivity might be affected. For all the amazing advances in AI and other digital tools over the last decade, their record in improving prosperity and spurring widespread economic growth is discouraging. Although a few investors and entrepreneurs have become very rich, most people haven’t benefited. Some have even been automated out of their jobs. [Emphasis mine.] Read the full article.

This is crucially important. Society already suffers from vast wealth disparities. It’s untenable to continue travelling down that road, especially if generative AI supercharges that gap.

The Algorithmic Bridge - If people (AI researchers in particular) are disappointed and angry at OpenAI (as I write this I can’t help but realize that the name is indeed a hilarious joke) it isn’t because of the change itself—no one would dispute a private company’s decision to choose profits (i.e., survival) over altruistic cooperation. The reason for the widespread discontent is that OpenAI was special. They promised the world they were different: A non-profit AI lab with a strong focus on open source and untethered from the self-interested clutches of shareholders was unheard of and the main reason the startup initially amassed so many supporters. Read the full article.

Ye olde bait and switch.

And also The Algorithmic Bridge - I’m not talking about AI becoming smarter than us (AGI, ASI, whatever). I’m not sure that’s possible. It’s not important. Because sooner than that (we won’t know how much), these things we’re building will grow so complex that not even our privileged minds will be able to make sense of them. It’s already happening. Read the full article.

So much for my championing of the idea of a National Algorithmic Safety Board.

New Atlas - Stanford's Alpaca AI performs similarly to the astonishing ChatGPT on many tasks – but it's built on an open-source language model and cost less than US$600 to train up. It seems these godlike AIs are already frighteningly cheap and easy to replicate. Read the full article.

Well, that at least will help level the playing field for startups and innovators, so the future of humanity isn’t left solely to the OpenAIs, Googles and Microsofts of the world.

TechCrunch - OpenAI today launched plugins for ChatGPT, which extend the bot’s functionality by granting it access to third-party knowledge sources and databases, including the web. Available in alpha to ChatGPT users and developers on the waitlist, OpenAI says that it’ll initially prioritize a small number of developers and subscribers to its premium ChatGPT Plus plan before rolling out larger-scale and API access. Read the full article.

Plugins will exponentially expand the capabilities of ChatGPT.

New York Times - The technology’s ability to create content that hews to predetermined ideological points of view, or presses disinformation, highlights a danger that some tech executives have begun to acknowledge: that an informational cacophony could emerge from competing chatbots with different versions of reality, undermining the viability of artificial intelligence as a tool in everyday life and further eroding trust in society…some of ChatGPT’s critics have called for creating their own chatbots or other tools that reflect their values instead. Read the full article.

What could possibly go wrong?

The Verge - Google is opening up limited access to Bard, its ChatGPT rival, a major step in the company’s attempt to reclaim what many see as lost ground in a new race to deploy AI. Bard will be initially available to select users in the US and UK, with users able to join a waitlist at bard.google.com, though Google says the roll-out will be slow and has offered no date for full public access. Read the full article.

I just got access to both Bard and Bing Chat. Give me a week or two to play around with them and I’ll report back.

Platformer - Today, Bard doesn’t connect to all the many things Google knows about you: your location history, your writing style, your documents, and so on. But someday it will, and on that day Bard will feel much more powerful. You can do a lot of amazing things by training a large language model on the web, but training a model on your personal data seems to me — at least in the abstract — as potentially even more powerful. Read the full article.

This feels like the first step toward being able to build a large language model of yourself. This is the beginning of digital clones.

Garbage Day - This week, though, Twitter announced a couple curious things that I do think provide some fun insights into how grim things are getting over there. First, we finally have an actual date for the “winding down” of of the “legacy verified program”. It’s April 1st. Because there is literally no one with any common sense left at that company. Oh, even funnier, if an institution wants to be verified it will cost $1,000 a month! Plus an additional $50 for each account underneath that organization. lol sorry, but are you out of your fucking mind??? Read the full article.

The April Fools are the ones who will shell out for this shit.

New York Times - Gordon E. Moore, a co-founder and former chairman of Intel Corporation, the California semiconductor chip maker that helped give Silicon Valley its name, achieving the kind of industrial dominance once held by the giant American railroad or steel companies of another age, died on Friday at his home in Hawaii. He was 94…it was he, his colleagues said, who saw the future. In 1965, in what became known as Moore’s Law, he predicted that the number of transistors that could be placed on a silicon chip would double at regular intervals for the foreseeable future, thus increasing the data-processing power of computers exponentially. Read the full article.

RIP.

The Dodo - The other day, nature photographer Doug Gemmell was out with his camera when a picture-perfect opportunity began to unfold in front of him. As Gemmell looked on through his lens, a bald eagle swooped down toward the ground, talons at the ready. Though the eagle was only a juvenile, having yet to develop white feathers on her head, her hunting prowess was still on full display. The eagle’s prey that day, however, was something Gemmell did not expect. Look.

😮

What I’m Watching

Scott Galloway is a kindred spirit here. He understands it’s really about the algorithm, not primarily the data.

Excellent video commentary about Big Tech’s descent into dumbness by Ryan Broderick of Garbage Day:

I just absolutely love animation. I always have, from Saturday morning cartoons to the present day. So you can imagine my delight when I discovered the Animation Obsessive Substack. Most recently, they shared this mesmerizing and gorgeous animated adaptation of a classic of American literature, Ernest Hemingway's The Old Man and the Sea. Read the full article and watch the short: