Copyrewrite

Copyright law needs an adjustment to provide the flexibility to accommodate an AI world and to get back to its original mission of enlarging the public domain; digital marketing news & MUSIC MONDAY!

Back in June, the United States Copyright Office reiterated its stance that AI-generated imagery is ineligible for copyright protection.

PetaPixel quotes Robert Kasunic of the USCO, stating “The Office will refuse to register works entirely generated by AI. Human authorship is a precondition to copyrightability.”

What, then, does “human authorship” mean?

Works that contain artificially-generated elements can be copyrighted but those AI-generated elements must be declared and are not eligible for copyright.

So, for example, say I shot my own photo of a busy downtown city scene and fed it into Midjourney as my base image, but then used Midjourney to replace the background with rolling rural fields to give it a Grant Wood-esque feel. In my copyright application, I’d have to declare that those fields were artificially generated, and probably also the city scene Midjourney created from my original photo.

I could copyright my admittedly crappy original photo but not the version I create via Midjourney that is based on the original.

The USCO’s stance to date renders all the imagery I’ve created for this newsletter using AI ineligible for copyright protection.

AI Copyright Suits

The other side of this coin, of course, is the copyright infringement claims photographers, artists and celebrities like Sarah Silverman are making against AI companies for using their creative works to train their Large Language Models.

I’m definitely sensitive to those claims. I’ve been publishing a lot of original content online since 1993, including articles, photography, audio and video. No one ever asked me if they could use my content to train their AI models but I’ve no doubt they’ve done just that.

It is that sensitivity that caused me—after my initial experimentation with Midjourney—to refrain from using actual living artist names as prompts to create an image that mimics an artist’s style.

AI Transformation

A Midjourney-created entry in an art contest generated controversy last year when it won the competition.

Joseph Foley at Creative Bloq reports:

Since then, Allen has tried to copyright his prize-winning work. But this week, the US Copyright Office issued its third, final decision on the matter. Its view is that human authorship is a necessary prerequisite for copyright. And it's a decision that could impact on the popularity of AI art generators.

In his appeal, Allen had argued that an “essential element of human creativity” was needed to use Midjourney and that he had input “at least 624” text prompts before the image generator produced what he was looking for. He also argued that his work should be registered under fair [use] doctrine because it was a transformative use of copyrighted material. “The underlying AI generated work merely constitutes raw material which Mr. Allen has transformed through his artistic contributions,” his appeal claimed.

These issues will sort themselves out in the courts, but I’m inclined to agree with the argument that generative AI is transformative, which is the key distinction applied under the fair use doctrine.

“transformative” uses are more likely to be considered fair. Transformative uses are those that add something new, with a further purpose or different character, and do not substitute for the original use of the work.

Benedict Evans’ excellent piece titled Generative AI and intellectual property clarifies the matter:

But the real intellectual puzzle, I think, is not that you can point ChatGPT at today’s headlines, but that on one hand all headlines are somewhere in the training data, and on the other, they’re not in the model.

OpenAI is no longer open about exactly what it uses, but even if it isn’t training on pirated books, it certainly uses some of the ‘Common Crawl’, which is a sampling of a double-digit percentage of the entire web. So, your website might be in there. But the training data is not the model. LLMs are not databases. They deduce or infer patterns in language by seeing vast quantities of text created by people - we write things that contain logic and structure, and LLMs look at that and infer patterns from it, but they don’t keep it. So ChatGPT might have looked at a thousand stories from the New York Times, but it hasn’t kept them.

Moreover, those thousand stories themselves are just a fraction of a fraction of a percent of all the training data. The purpose is not for the LLM to know the content of any given story or any given novel - the purpose is for it to see the patterns in the output of collective human intelligence.

Furthermore, throughout history creative works have always been iterative. Innovations developed by one artist are adopted by subsequent artists.

The innovations of the impressionist painters influenced the style of the pointillist and expressionist painters, which in turn led on to cubism and so forth.

Ernest Hemingway’s journalism-inspired fiction of short, declarative sentences and realistic dialog influenced countless writers who followed him and, just as profoundly, the dialog of Hollywood movies.

Incorporating previously established styles into your creative vision is a time-honored tradition and a crucial aspect that makes innovation possible.

It’s the very definition of transformative.

AI Guided By A Human Hand

A federal judge recently ruled against Stephen Thaler, who brought suit challenging the government’s position that works made by AI are not copyrightable. According to The Hollywood Reporter:

Thaler [is the] chief executive of neural network firm Imagination Engines. In 2018, he listed an AI system, the Creativity Machine, as the sole creator of an artwork called A Recent Entrance to Paradise, which was described as “autonomously created by a computer algorithm running on a machine.” […]

“In the absence of any human involvement in the creation of the work, the clear and straightforward answer is the one given by the Register: No,” Howell wrote.

I agree with the judge’s decision.

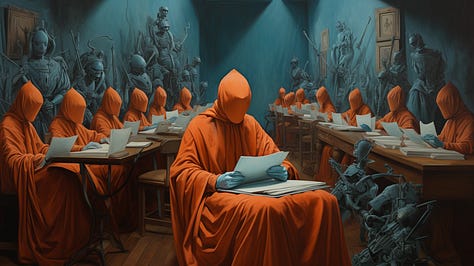

But that’s not to say I believe any AI-created work should be ineligible for copyright.

Regular readers of this newsletter will have noticed that I use Midjourney to create banner images for my articles.

The images I create using this tool should not be disqualified from copyright protection simply by virtue of the use of the tool. By that logic, any image generated using Photoshop should be disqualified.

I’m being a bit facetious here but the point is that AI is just a tool. A very powerful tool, but still just a tool. The tool needs a human hand (i.e. human creative vision) to produce anything.

When I use Midjourney to create images for these posts, I have a visual idea in mind of what I want to create. So I start with a creative vision that comes from me, not Midjourney.

Then I have to prompt Midjourney to create the image I want to produce. This is creative guidance. To get the image I want, to get the best result, I must be creative in the way I write my prompts.

I must innovate by trying different approaches to get the image I want. More creative guidance. I must iterate to refine my images. More creative guidance.

“But Midjourney does all the work! Midjourney executes the image!” the naysayer amongst you might say (there’s one in every crowd, isn’t there?).

True, I’ll give you that. Yet, but for me, there would be no image at all.

“But you’re not creating those stunning images. All the talent is in Midjourney,” the naysayer retorts.

But the talent for vision resides in me. Without my vision, there’d be no work.

I have a specific intent with the images I create using Midjourney; I have a specific artistic vision I want to achieve; and I have the talent to guide the tool to execute my vision through the prompts I create. All of that results in my unique creative expression, even if I did not apply brush to canvas.

Jeff Koons

So, naysayer, does this mean that Jeff Koons—the famous modern artist who conceives of an artistic vision and then directs his apprentices to create it—is not deserving of copyright protection?

No? Still not buying what I’m selling?

Try this on for size: Lila Shroff at The Reboot writes brilliantly about artists who are curating their own original datasets with which to train generative AI models so as to produce their own very specific artistic visions:

Stephanie Dinkins explores the possibilities for “small data” with “Not The Only One,” an embodied chatbot sculpture trained on the oral histories of three generations of women from a single family. In “The Zizi Show,” Jake Elwes’ deepfake drag cabaret highlights representational harms felt by queer communities. The datasets Elwes developed for the show are deliberately diverse and designed around a principle of consent. Finally, as part of a project exploring the migrational patterns of her Saudi and Iraqi ancestors, Nouf Aljowaysir’s “Salaf” explicitly investigates issues of dataset representation. When Aljowaysir performed an object-classification task on historical images of Bedouin lifestyles, the model she used routinely misidentified veiled women, confidently labeling them as “soldiers,” “army,” or other military paraphernalia. In protest, Aljowaysir used an image segmentation model to erase the misrepresentations from the archival images. She then trained a new model on the erased dataset to make visible the absent figures, signifying the “eradication of her ancestor’s collective memory.”

You cannot seriously tell me creative works produced with these AI models are not worthy of copyright.

Benedict Evans has some other examples:

At the simplest level, we will very soon have smartphone apps that let you say “play me this song, but in Taylor Swift’s voice”. That’s a new possibility, but we understand the intellectual property ideas pretty well - there’ll be a lot of shouting over who gets paid what, but we know what we think the moral rights are. Record companies are already having conversations with Google about this.

But what happens if I say “make me a song in the style of Taylor Swift” or, even more puzzling, “make me a song in the style of the top pop hits of the last decade”?

A person can’t mimic another voice perfectly (impressionists don’t have to pay licence fees) but they can listen to a thousand hours of music and make something in that style - a ‘pastiche’, we sometimes call it. If a person did that, they wouldn’t have to pay a fee to all those artists, so if we use a computer for that, do we need to pay them? I don’t think we know how we think about that. We might know what the law might say, but we might want to change that.

The Original Intent Of Copyright

Tabrez Sayed provides a nice summary of how copyright law has changed from its introduction in 1790 to the modern age of Disney and Steamboat Willie.

The original intent of copyright protection was to allow innovators to profit from their creations for 14 years, renewable for another 14 years if the creator was still alive.

Copyright protection provided a powerful incentive for innovation but after 28 years, those creations would enter the public domain, creating a base of knowledge that could contribute to subsequent innovations.

The reason for putting a time limit on copyright protections was to expand the body of publicly-available knowledge, thereby allowing for greater and greater innovation, which in turn would expand public knowledge exponentially. A virtuous circle of ever-renewing knowledge from which a nascent experiment in representative democracy would greatly benefit.

Subsequent amendments to copyright law extended protection to 56 years by expanding each of the two original 14-year terms to 28 years.

Steamboat Willie

In 1984, 56 years after Steamboat Willie was created, Disney’s original mouse was slated to enter the public domain.

Seeing this threat, Disney lobbied Congress, and the result was the Copyright Act of 1976, which allowed copyright protection for the whole life of the author plus half a century more, or 75 years if a corporation owned the creation.

This set the expiration date on Steamboat Willie at 2003, and yet Willie is still under copyright protection thanks to Disney’s additional efforts to pass the Copyright Term Extension Act of 1998, otherwise known as the “Mickey Mouse Protection Act.”

Steamboat Willie will finally enter the public domain next year but don’t be fooled into thinking that Disney has given up all rights to the character. It’s complicated, as the New York Times points out.

The long saga of Disney’s enslavement of Steamboat Willie illustrates just how far copyright law has drifted from its original mission of contributing to the public good.

Creative Commons

There is one fairly successful attempt at creating a licensing system that tries to reclaim some of copyright’s original public-good mission.

It’s called the Creative Commons. According to the nonprofit’s website:

CC is an international nonprofit organization that empowers people to grow and sustain the thriving commons of shared knowledge and culture we need to address the world’s most pressing challenges and create a brighter future for all.

Together with our global community and multiple partners, we build capacity and infrastructure, we develop practical solutions, and we advocate for better sharing: sharing that is contextual, inclusive, just, equitable, reciprocal, and sustainable.

Creative Commons licenses are built into the infrastructure of Wikipedia and the photo-sharing site Flickr.

They allow creators to specify the terms and conditions under which their content can be used.

USCO Seeks Public Comment

It should be evident by now that there is no clear-cut answer regarding the proper stance the United States Copyright Office should take on protection of AI-generated intellectual property.

Recognizing this, late last month the USCO asked for public input on the issues of AI-generated content and copyright protection.

Benj Edwards at Ars Technica reports:

Specifically, the Copyright Office is interested in four main areas: the use of copyrighted materials to train AI models and whether this constitutes infringement; the extent to which AI-generated content should or could be copyrighted, particularly when a human has exercised some degree of control over the AI model’s operations; how liability should be applied if AI-generated content infringes on existing copyrights; and the impact of AI mimicking voices or styles of human artists, which, while not strictly copyright issues, may engage state laws related to publicity rights and unfair competition.

The deadline for written comments is October 18 and the deadline for reply comments is November 15. You can submit your comments at Copyright.gov.

Digital News Articles

Artificial Intelligence

Wired by Steven Levy - What OpenAI Really Wants - For Altman and his company, ChatGPT and GPT-4 are merely stepping stones along the way to achieving a simple and seismic mission, one these technologists may as well have branded on their flesh. That mission is to build artificial general intelligence—a concept that’s so far been grounded more in science fiction than science—and to make it safe for humanity. The people who work at OpenAI are fanatical in their pursuit of that goal. (Though, as any number of conversations in the office café will confirm, the “build AGI” bit of the mission seems to offer up more raw excitement to its researchers than the “make it safe” bit.) These are people who do not shy from casually using the term “super-intelligence.” They assume that AI’s trajectory will surpass whatever peak biology can attain. The company’s financial documents even stipulate a kind of exit contingency for when AI wipes away our whole economic system.

A really good but long read about the history of OpenAI and the motivations of its employees.

New York Times by Tiffany Hsu - What Can You Do When A.I. Lies About You? - A.I. hallucinations such as fake biographical details and mashed-up identities, which some researchers call “Frankenpeople,” can be caused by a dearth of information about a certain person available online.

The technology’s reliance on statistical pattern prediction also means that most chatbots join words and phrases that they recognize from training data as often being correlated. That is likely how ChatGPT awarded Ellie Pavlick, an assistant professor of computer science at Brown University, a number of awards in her field that she did not win.

The same thing happened to me, as I explained in June.

This is the opposite of the Discoverability Crisis I wrote about last month; it’s being discovered but inaccurately.

The correlation of data with a specific person, organization, or brand is a new wrinkle that must be taken into account for reputation management practices.

AI Incident Database - Dedicated to indexing the collective history of harms or near harms realized in the real world by the deployment of artificial intelligence systems. Like similar databases in aviation and computer security, the AI Incident Database aims to learn from experience so we can prevent or mitigate bad outcomes.

Great resource.

The Verge by Mia Sato - Can news outlets build a ‘trustworthy’ AI chatbot? - a group of tech outlets is attempting to incorporate generative AI into its websites, though readers won’t find a machine’s byline anytime soon. On August 1st, an AI chatbot tool was added to Macworld, PCWorld, Tech Advisor, and TechHive, promising that readers can “get [their] tech questions answered by AI, based only on stories and reviews by our experts.”

The AI chatbot, dubbed Smart Answers, appears across nearly all articles and on the homepages of the sites, which are owned by media / marketing company Foundry. Smart Answers is trained only on the corpus of English language articles from the four sites and excludes sponsored content and deals posts. The user experience is similar to other consumer tools like ChatGPT: readers type in a question, and Smart Answers spits out a response. Alternatively, readers can select a query from an FAQ list, which is AI-generated but based on what people are asking and clicking on. Smart Answers responses include links to the articles from which information was pulled.

I was wondering when this would happen. I’d love to have enough time to train an LLM on all my writing so I could have a chatbot through which I could have a conversation with the content I’ve created over the years.

MIT Technology Review by Will Douglas Heaven - DeepMind’s cofounder: Generative AI is just a phase. What’s next is interactive AI. - DeepMind cofounder Mustafa Suleyman wants to build a chatbot that does a whole lot more than chat. In a recent conversation I had with him, he told me that generative AI is just a phase. What’s next is interactive AI: bots that can carry out tasks you set for them by calling on other software and other people to get stuff done. He also calls for robust regulation—and doesn’t think that’ll be hard to achieve.

Suleyman is not the only one talking up a future filled with ever more autonomous software. But unlike most people he has a new billion-dollar company, Inflection, with a roster of top-tier talent plucked from DeepMind, Meta, and OpenAI, and—thanks to a deal with Nvidia—one of the biggest stockpiles of specialized AI hardware in the world. Suleyman has put his money—which he tells me he both isn't interested in and wants to make more of—where his mouth is.

Interesting interview.

One Useful Thing by Ethan Mollick - Centaurs and Cyborgs on the Jagged Frontier - For the last several months, I have been part of a team of social scientists working with Boston Consulting Group, turning their offices into the largest pre-registered experiment on the future of professional work in our AI-haunted age. Our first working paper is out today. There is a ton of important and useful nuance in the paper but let me tell you the headline first: for 18 different tasks selected to be realistic samples of the kinds of work done at an elite consulting company, consultants using ChatGPT-4 outperformed those who did not, by a lot. On every dimension. Every way we measured performance.

Consultants using AI finished 12.2% more tasks on average, completed tasks 25.1% more quickly, and produced 40% higher quality results than those without. Those are some very big impacts. Now, let’s add in the nuance.

Excellent piece! Must read for anyone considering implementing AI within their organization and also as a framework for using AI individually.

Happy Hour

Me - The Quest For The Perfect Spicy Margarita - My shaker is a fine object to behold. Known as a “cobbler shaker,” it is a tall, sleek object of gleaming stainless steel that sits upon an accented copper base. Seen from the ground level, it would be perfectly at home as an obelisk-esque object in a Kubrick film.

This is my finely-tuned recipe for a spicy margarita.

Podcasting

TechCrunch by Sarah Perez - YouTube to support RSS uploads for podcasters by year-end, plus private feeds in YouTube Music - the platform will be rolling out support for RSS uploads for podcasters by the end of the year, among other updates. The new functionality had been in beta testing since earlier this year, as a strategic, invite-only pilot.

YouTube additionally confirmed to TechCrunch it’s rolling out support for podcasts on YouTube Music by the end of year, as well.

Another channel with massive potential to grow your podcasting audience.

Social Media

New York Times by Ezra Klein - Elon Musk Got Twitter Because He Gets Twitter - Jack Dorsey, a Twitter co-founder and former chief executive, always wanted it to be something else. Something it wasn’t, and couldn’t be. “The purpose of Twitter is to serve the public conversation,” he said in 2018. Twitter began “measuring conversational health” and trying to tweak the platform to burnish it. Sincere as the effort was, it was like those liquor ads advising moderation. You don’t get people to drink less by selling them whiskey. Similarly, if your intention was to foster healthy conversation, you’d never limit thoughts to 280 characters or add like and retweet buttons or quote-tweet features. Twitter can’t be a place to hold healthy conversation because that’s not what it’s built to do.

So what is Twitter built to do? It’s built to gamify conversation. As C. Thi Nguyen, a philosopher at the University of Utah, has written, it does that “by offering immediate, vivid and quantified evaluations of one’s conversational success. Twitter offers us points for discourse; it scores our communication. And these gamelike features are responsible for much of Twitter’s psychological wallop. Twitter is addictive, in part, because it feels so good to watch those numbers go up and up.”

A precise diagnosis of the effect Twitter has had on our public discussions, including those about public policy. Gamification is no way to shape public policy.

BGR by Joe Wituschek - X officially takes on LinkedIn with its new job posting feature - an app researcher found indications that X (formerly known as Twitter) was looking to take on Microsoft’s social media network for professionals by bringing job postings to its platform. At the time (when X was actually still Twitter), it appeared that the feature would allow verified organizations to integrate job postings from an Applicant Tracking System or XML feed into their Twitter profile.

Sure, if you want to hire fascists.

TechCrunch by Sarah Perez - YouTube demystifies the Shorts algorithm, views and answers other creator questions - According to the product lead for Shorts, Todd Sherman, the Shorts algorithm differs from the long-form algorithm where people are tapping on videos to watch — essentially making a specific choice that then drives more recommendations. But on Shorts, people are swiping through content not knowing what comes next. While both recommendation systems are designed to present videos that people will value and enjoy, the Shorts feed prioritizes a more diverse feed because people are flipping through hundreds of videos versus maybe 10 or 20 in long-form.

Sherman also noted that not every flip in Shorts is counted as a view, either — a difference from some other platforms where viewing the first frame is counted as a view. (TikTok counts views as soon as a video starts to play, it’s said). On Shorts, however, the view is meant to reflect that the user had some intent to watch so creators have some “meaningful threshold” that someone meant to watch the view. […]

“There are parts of the algorithm that try and find people, find creators an audience,” Sherman explained. “And sometimes those algorithms will go and effectively find like a seed audience, find a set of people that may enjoy your video. And depending on how that goes, it may get a lot more traffic or it may taper off,” he said.

This last paragraph illustrates how digital platforms interpret the reputations of their users, in this case the users’ video consumption reputations.

TechCrunch by Ivan Mehta - X launches Community Notes for videos - Social network X, formerly known as Twitter, has introduced Community Notes for videos. Community Notes is an existing program for crowdsourced moderation. The Elon Musk-owned platform announced that notes by contributors attached to a video will show up in all posts with that video.

“Notes written on videos will automatically show on other posts containing matching videos. A highly-scalable way of adding context to edited clips, AI-generated videos, and more,” the company said in a post.

This is actually a pretty clever innovation. I’d like to see other platforms adopt it because Twitter/X has become a fascist cesspool.

Web Development

WordPress - Introducing the 100-Year Plan: Secure Your Online Legacy for a Century - The 100-Year Plan isn’t just about today. It’s an investment in tomorrow. Whether you’re cementing your own digital legacy or gifting 100 years of a trusted platform to a loved one, this plan is a testament to the future’s boundless potential.

The cost is $38,000.

Not a surprise at all. This was inevitable.

TechCrunch by Sarah Perez - Inalife’s digital legacy platform lets you preserve your family’s memories - A new startup, Inalife, has created a digital legacy platform that aims to help families preserve their precious memories, create an interactive family tree and even record messages for other members to be played at a date in the future — like when a new baby grows up and reaches adulthood, for example.

Are we seeing a trend here?

wpbeginner - What Is Google’s INP Score and How to Improve It in WordPress - Google Core Web Vitals are website performance metrics that Google considers important for overall user experience. These web vital scores are part of Google’s overall page experience score, which will impact your SEO rankings. […]

INP stands for ‘Interaction to Next Paint’. It is a new Google Core Web Vital metric that measures the user interactions that cause delays on your website.

The INP test measures how long it takes between a user interacting with your website, like clicking on something, and your content visually updating in response. This visual update is called the ‘next paint’.

This is a user experience reputation metric.

Music Monday

Girl Talk: Copyright infringement or transformative fair use?

Glorious Midjourney Mistakes

The banner images I created for this article that ended up on the cutting room floor.

This is a great piece of work! Nice compendium. One thing that strikes me as either deliberately or accidentally genius about the current posture on AI copyright is that it stands to help avoid the worst fears of the Writers Guild and SAG. While certainly a lot of small YouTube-style content producers may not care that they can’t enforce IP protection on their AI generated posts, it seems certain that the likes of Disney and other studios will want to own the rights to their films and tv shows. That means, by extension, they are going to need human-made scripts and performances. Nice side effect.